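1 The Hadoop HDFS Architecture

1.1 Introduction to Hadoop HDFS

– HDFS is a distributed file system

– HDFS is designed to run on commodity hardware

– HDFS is highly fault-tolerant and suited to deployment on low-cost hardware

– HDFS provides high-throughput read and write access for applications with large data sets

– HDFS is built in Java, which makes it portable across platforms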

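1.2 Features of Hadoop HDFS

– Automatic handling of hardware failure: HDFS detects hardware faults and recovers from them automatically

– Streaming data access: HDFS gives applications streaming access to their data and favors high throughput over low latency

– Large data sets: HDFS scales to hundreds or even thousands of nodes and serves files that are gigabytes to terabytes in size

– Simple coherency model: HDFS follows a write-once, read-many access pattern to improve throughput (content can only be appended or truncated at the end of a file, never updated at an arbitrary position)

– Moving computation to the data: work is scheduled on or near the DataNode that holds the data, which reduces traffic across the network

– Portability across heterogeneous platforms: HDFS is designed to be easily moved from one platform to another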

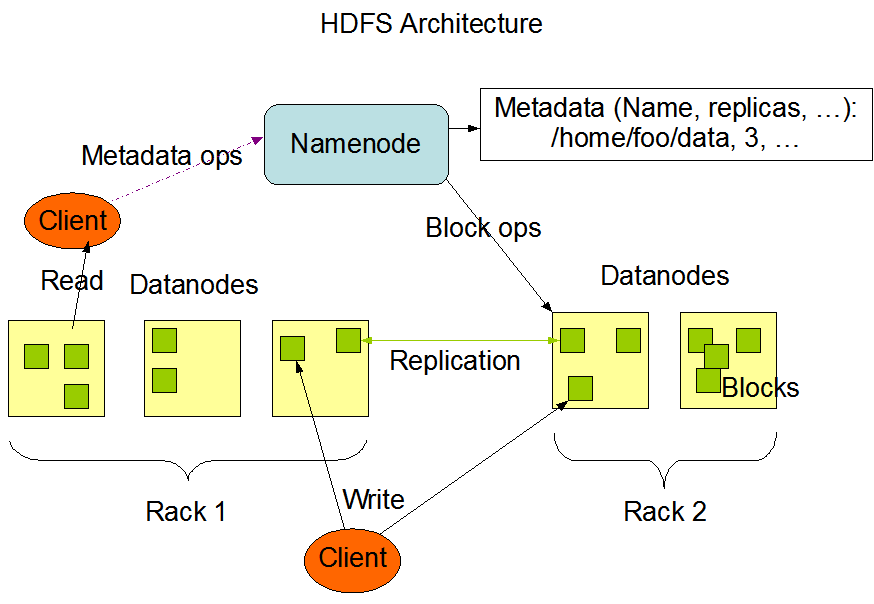

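1.3 Hadoop HDFS architecture

– HDFS has a master/slave architecture: a NameNode (the master) and DataNodes (the slaves)

– The NameNode serves the file system namespace (think of it as the directory tree) to clients and answers their read and write requests by telling them which nodes actually hold the data

– DataNodes store the data that clients read and write (they are the storage nodes for data blocks)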

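1.4 The Hadoop HDFS namespace

– HDFS manages files hierarchically through a namespace (a directory tree, as in most file systems)

– HDFS does not yet support hard links or soft links

– The HDFS namespace is maintained by the NameNode

– HDFS lets an application specify the number of replicas of a file (also called the replication factor)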

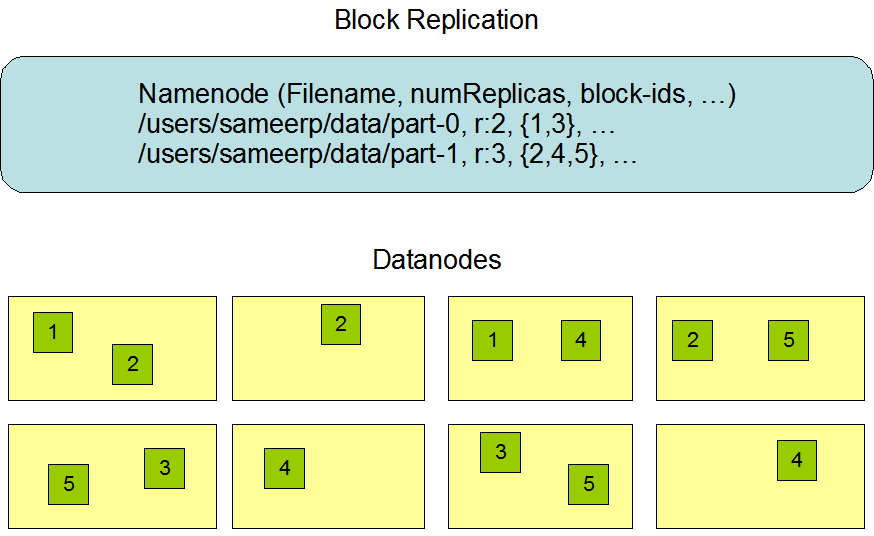

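1.5 Data replication in Hadoop HDFS

1.5.1 The concept of HDFS data replication

– HDFS can store very large files because it splits each file into blocks of the same size (only the last block may be smaller) and spreads them across different DataNodes

– HDFS is fault-tolerant because each block is replicated and the copies are placed on different DataNodes

– The block size and the replication factor are configurable per file through configuration parameters; the replication factor can also be changed after a file has been created

– Files in HDFS are write-once (there is only one writer at any time)

– The NameNode monitors DataNodes by periodically receiving a Heartbeat (which means the node is running normally) and a Blockreport (the list of all blocks on that DataNode) from each of them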
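The splitting and replication described above reduce to simple arithmetic. The shell sketch below is illustrative only: the function names blocks_needed and raw_bytes are made up for this example and are not part of Hadoop, and the 1 GiB file, 64 MiB block size, and replication factor 3 are sample values.

```shell
#!/usr/bin/env bash
# Illustrative helpers (not part of Hadoop): block count and raw storage.

# blocks_needed FILE_BYTES BLOCK_BYTES -> number of blocks (ceiling division)
blocks_needed() {
    local file_bytes=$1 block_bytes=$2
    echo $(( (file_bytes + block_bytes - 1) / block_bytes ))
}

# raw_bytes FILE_BYTES REPLICATION -> bytes consumed across the whole cluster
raw_bytes() {
    local file_bytes=$1 replication=$2
    echo $(( file_bytes * replication ))
}

# Sample values: a 1 GiB file, 64 MiB blocks, replication factor 3
file=$(( 1024 * 1024 * 1024 ))
block=$(( 64 * 1024 * 1024 ))
echo "blocks: $(blocks_needed "$file" "$block")"
echo "raw bytes: $(raw_bytes "$file" 3)"
```

With these sample values the file occupies 16 blocks and 3 GiB of raw storage; a file one byte larger would need a 17th, mostly empty, block.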

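1.5.2 Node placement in HDFS

– The reliability and performance that result from splitting and replicating data are critical to HDFS

– HDFS uses rack awareness and a replica placement policy to improve data reliability, availability, and network bandwidth utilization

– An HDFS cluster usually spans several racks, and DataNodes in different racks exchange data through switches

– Network bandwidth between nodes in the same rack is usually greater than between nodes in different racks

– With rack awareness configured, the NameNode determines the rack ID of each DataNode

– A simple and sound policy is to place replicas on different racks so that the failure of a single rack does not lose data (at the price of more expensive writes)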

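1.5.3 HDFS safe mode

– On startup, the NameNode enters a state called Safemode

– No block replication takes place while the NameNode is in Safemode

– The NameNode receives Heartbeat and Blockreport messages from the DataNodes

– Every block has a specified minimum number of replicas

– A block is considered safely replicated once that minimum number of replicas has checked in with the NameNode

– Once a configurable percentage of blocks has checked in as safely replicated, the NameNode waits a further 30 seconds and then leaves Safemode

– The NameNode then works out which blocks still have fewer than the specified number of replicas and replicates those blocks to other DataNodes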
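The Safemode exit rule can be written as a small integer check. This is a sketch under assumptions: safemode_may_exit is a hypothetical helper, the threshold is given in thousandths to avoid floating point, and the 30-second wait is left out. In Hadoop 2.x the percentage and the wait are controlled by dfs.namenode.safemode.threshold-pct and dfs.namenode.safemode.extension.

```shell
#!/usr/bin/env bash
# Hypothetical helper: would the NameNode be allowed to leave Safemode?

# safemode_may_exit SAFE_BLOCKS TOTAL_BLOCKS THRESHOLD_PERMILLE
# Succeeds (exit status 0) when safe/total >= threshold/1000.
safemode_may_exit() {
    local safe=$1 total=$2 permille=$3
    [ $(( safe * 1000 )) -ge $(( total * permille )) ]
}

# Sample values: 1000 blocks in total, threshold 999 per mille (0.999)
if safemode_may_exit 999 1000 999; then
    echo "threshold reached"
fi
if ! safemode_may_exit 998 1000 999; then
    echo "still waiting for block reports"
fi
```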

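1.6 How it works

1.6.1 How the HDFS NameNode works

– The HDFS namespace is stored by the NameNode

– The NameNode uses a transaction log called the EditLog to persistently record every change to file system metadata (creating a file, changing a replication factor, and so on)

– The EditLog is stored as a file in the NameNode's local file system

– The entire file system namespace is stored in the NameNode's local file system in a file called FsImage

– An image of the entire namespace and the Blockmap is also kept in memory (4 GB of RAM is generally plenty for a NameNode)

– The in-memory namespace is loaded from the FsImage when the NameNode starts; the NameNode applies all transactions from the EditLog to it and writes the result out as a new FsImage

– Once the EditLog transactions have been applied to the FsImage, the NameNode truncates the old EditLog (this process is called a checkpoint)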
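The checkpoint cycle can be mimicked with two plain text files. The sketch below is a toy model, not how the NameNode stores its data: the FsImage is assumed to be a sorted list of paths, the EditLog a list of "OP PATH" lines, and the checkpoint function and the CREATE/DELETE operation names are invented for the example.

```shell
#!/usr/bin/env bash
# Toy checkpoint: FsImage is a sorted list of paths, EditLog a list of "OP PATH" lines.

# checkpoint FSIMAGE EDITLOG -> applies the log to the image, then truncates the log
checkpoint() {
    local fsimage=$1 editlog=$2 tmp op path
    tmp=$(mktemp)
    cp "$fsimage" "$tmp"
    while read -r op path; do
        case $op in
            CREATE) echo "$path" >> "$tmp" ;;
            DELETE) grep -vxF -- "$path" "$tmp" > "$tmp.new" || true
                    mv "$tmp.new" "$tmp" ;;
        esac
    done < "$editlog"
    sort -u "$tmp" > "$fsimage"    # write the new image
    rm -f "$tmp"
    : > "$editlog"                 # truncate the old log
}

# Demo with throwaway files
work=$(mktemp -d)
printf '/a\n/b\n' > "$work/fsimage"
printf 'CREATE /c\nDELETE /a\n' > "$work/editlog"
checkpoint "$work/fsimage" "$work/editlog"
cat "$work/fsimage"
rm -rf "$work"
```

After the demo runs, the image lists /b and /c and the log is empty, which is the state a restart would load from.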

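1.6.2 How the HDFS DataNode works

– A DataNode stores HDFS data in files in its local file system

– Each HDFS block is stored in a separate file in the local file system

– Because a local file system may not handle a huge number of files in a single directory efficiently, the DataNode spreads the block files over subdirectories

– When a DataNode starts, it scans its local file system and generates a list of all HDFS blocks that correspond to the local files

– This list of HDFS blocks is sent to the NameNode (this is the Blockreport)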

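1.7 HDFS communication protocols

– All HDFS communication protocols are layered on top of TCP/IP

– A client talks to the NameNode using the Client Protocol

– DataNodes talk to the NameNode using the DataNode Protocol

– A Remote Procedure Call (RPC) abstraction wraps both the Client Protocol and the DataNode Protocol

– The NameNode never initiates an RPC; it only responds to RPC requests issued by DataNodes or clients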

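1.8 HDFS fault tolerance

1.8.1 Types of failure

– NameNode failures

– DataNode failures

– Network partitions

1.8.2 DataNode fault tolerance

– Each DataNode periodically sends a Heartbeat message to the NameNode; a DataNode whose Heartbeats stop arriving is marked as dead and is sent no further I/O requests

– When the NameNode detects that the replication factor of some blocks has fallen below the specified value, it starts re-replication and copies those blocks to other DataNodes

– Re-replication is also triggered when a hard disk on a DataNode fails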

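1.8.3 Rebalancing DataNodes

– When the free space on a DataNode falls below a certain threshold, data on that DataNode may be moved automatically to another DataNode

– In addition, a future scheme might respond to a rise in demand for a particular file by automatically creating additional replicas of it and rebalancing the cluster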

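1.8.4 Data integrity

– The HDFS client software verifies data integrity by computing a checksum for every block of a file

– The checksums are stored in a separate hidden file in the same HDFS namespace

– When a client retrieves file contents, it verifies that the data received for each block matches the stored checksum

– If they do not match, the client retrieves that block from another DataNode that holds an intact replica

Note: storage device faults, network faults, and buggy software can all corrupt a block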
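The check-on-read idea above can be tried out locally. The following is a toy model, not the HDFS implementation: the POSIX cksum CRC stands in for HDFS's own checksum, the block size is a tiny 8 bytes so the effect is visible, and block_checksums and verify_blocks are names invented for the example.

```shell
#!/usr/bin/env bash
# Toy model of per-block checksums; cksum stands in for the HDFS checksum.

# block_checksums FILE BLOCK_BYTES -> one CRC per block, in block order
block_checksums() {
    local file=$1 block_bytes=$2 dir piece
    dir=$(mktemp -d)
    split -b "$block_bytes" "$file" "$dir/blk_"
    for piece in "$dir"/blk_*; do
        [ -e "$piece" ] || continue          # empty file: no blocks
        cksum < "$piece" | cut -d' ' -f1
    done
    rm -rf "$dir"
}

# verify_blocks FILE BLOCK_BYTES SUMFILE -> succeeds only if every block still matches
verify_blocks() {
    [ "$(block_checksums "$1" "$2")" = "$(cat "$3")" ]
}

# Demo: record checksums, then change one byte and verify again
work=$(mktemp -d)
printf 'hello hdfs block data' > "$work/file"
block_checksums "$work/file" 8 > "$work/file.crc"
verify_blocks "$work/file" 8 "$work/file.crc" && echo "intact"
printf 'hellO hdfs block data' > "$work/file"
verify_blocks "$work/file" 8 "$work/file.crc" || echo "corrupt block detected"
rm -rf "$work"
```

A real client would react to the mismatch by fetching the block from another replica; the sketch only reports it.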

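1.8.5 NameNode integrity

– The NameNode can be configured to maintain multiple copies of the FsImage and EditLog to protect its metadata

– Every update to the FsImage or EditLog is applied synchronously to all of the copies (which may cost some performance)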

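1.8.6 Snapshots

– A snapshot stores a copy of the data as of a particular instant in time

– Snapshots make it possible to roll a corrupted HDFS instance back to a previously known good point in time

– Older design documents list snapshots as a feature planned for a future release; Hadoop 2.x releases, including the 2.6.5 release deployed later in this guide, do provide HDFS snapshots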

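1.9 HDFS data handling

1.9.1 How blocks are handled

– HDFS is designed for very large files

– HDFS suits applications that process large data sets

– Data in HDFS is written once and read many times (reads are streaming)

– A typical HDFS block size is 64 MB, so a file is normally split into 64 MB chunks (Hadoop 2.x sets the default dfs.blocksize to 128 MB)

– The blocks of a file are generally placed on different DataNodes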

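1.9.2 How files are handled

– A client request to create a file does not reach the NameNode immediately

– The HDFS client first caches the file data in a temporary local file

– Only when the local file has accumulated more than one HDFS block of data does the client contact the NameNode

– On receiving the request, the NameNode inserts the file name into the file system hierarchy and allocates a data block for it

– The NameNode responds to the client with the identity of the DataNode and of the destination data block

– The client flushes the block of data from the local temporary file to the specified DataNode

– When the file is closed, the remaining unflushed data in the temporary local file is transferred to the DataNode named by the NameNode

– The client then tells the NameNode that the file is closed, at which point the NameNode commits the file creation operation to its persistent store

– If the NameNode dies before the file is closed, the file is lost

Note: in the scheme above the client acts as a cache for data on its way into the distributed store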

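1.9.3 Replication pipelining between DataNodes

– When a client writes data to an HDFS file, the data is first written to a local file

– Suppose the HDFS file has a replication factor of 3

– When the local file has accumulated a full block of data, the client retrieves a list of DataNodes from the NameNode

– The list contains the DataNodes that will host a replica of that block

– The client then flushes the data block to the first DataNode

– The first DataNode receives the data in small portions, writes each portion to its local file system, and at the same time transfers it to the second DataNode

– The second DataNode receives each portion, writes it to its local file system, and at the same time transfers it to the third DataNode

– The third DataNode writes the data to its local file system

Note: a DataNode can receive data from the previous node in the pipeline while forwarding it to the next one; this is what replication pipelining means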
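A shell pipeline gives a rough feel for this store-and-forward behavior. The sketch below is only an analogy: three local directories stand in for three DataNodes, tee plays the part of a node that writes its copy while passing the stream on, and the name pipeline_write and the block file name blk_0001 are made up for the example.

```shell
#!/usr/bin/env bash
# Toy pipeline: three directories stand in for three DataNodes.

# pipeline_write SRC DN1 DN2 DN3 -> stores SRC as blk_0001 on each "DataNode"
pipeline_write() {
    local src=$1 dn1=$2 dn2=$3 dn3=$4
    # Each tee writes its local copy and forwards the same bytes downstream
    # while data is still arriving; the last node only writes.
    tee "$dn1/blk_0001" < "$src" | tee "$dn2/blk_0001" > "$dn3/blk_0001"
}

# Demo with throwaway directories
work=$(mktemp -d)
mkdir "$work/dn1" "$work/dn2" "$work/dn3"
printf 'block payload\n' > "$work/src"
pipeline_write "$work/src" "$work/dn1" "$work/dn2" "$work/dn3"
cmp "$work/dn1/blk_0001" "$work/dn3/blk_0001" && echo "replicas identical"
rm -rf "$work"
```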

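1.10 HDFS interfaces

1.10.1 Java API

HDFS provides a FileSystem Java API for applications

1.10.2 FS Shell

hadoop dfs -mkdir /foodir

hadoop dfs -rmr /foodir

hadoop dfs -cat /foodir/myfile.txt

Note: Hadoop 2.x marks the "hadoop dfs" form as deprecated in favor of "hdfs dfs", and "-rmr" in favor of "-rm -r"

1.10.3 DFSAdmin

hadoop dfsadmin -safemode enter

hadoop dfsadmin -report

hadoop dfsadmin -refreshNodes

Note: the commands above are available to administrators only

1.10.4 Browser interface

HDFS provides a web server through which the HDFS namespace can be browsed and the contents of files viewed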

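1.11 Space reclamation in HDFS

1.11.1 Deleting files

– When a user or an application deletes a file, HDFS moves it to the "/trash" directory (current releases use a per-user trash directory, /user/<username>/.Trash)

– A file stays in the trash for a limited lifetime; when that expires the file is actually deleted and the blocks associated with it are freed

Note: there can be an appreciable delay between the time a file is deleted and the time the free space shows up in HDFS

1.11.2 Recovering files

– A user can restore a file from the trash directory as long as it has not yet expired

– The retention time before automatic deletion is set with the "fs.trash.interval" parameter in "core-site.xml" (the value is in minutes; 0 disables the trash so files are deleted immediately)

1.11.3 Deletion after lowering the replication factor

– When the replication factor of a file is reduced, the NameNode selects the excess replicas to delete

– The next Heartbeat carries this information to the DataNode, which then removes the corresponding blocks so that the freed space appears in the cluster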

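2 Hadoop Configuration Guide

2.1 Goal

Building on the previous chapter, this chapter deploys Apache Hadoop in fully distributed mode, which supports clusters ranging from a few nodes to several thousand nodes.

2.2 Prerequisites

This chapter builds on a single-node setup. If you are not yet familiar with single-node Hadoop deployment, see the following article: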

https://www.cmdschool.org/archives/5507

2.3 Cluster roles

2.3.1 HDFS daemons

– NameNode (master)

– SecondaryNameNode (master)

– DataNode (slave)

2.3.2 YARN daemons

– ResourceManager (master)

– NodeManager (slave)

– Web App Proxy Server

2.3.3 MapReduce daemons

– MapReduce Job History Server

2.4 Hadoop configuration files

2.4.1 Read-only default configuration files

${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-common/core-default.xml

${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

${HADOOP_HOME}/share/doc/hadoop/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

${HADOOP_HOME}/share/doc/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

2.4.2 Site-specific configuration files

${HADOOP_HOME}/etc/hadoop/core-site.xml

${HADOOP_HOME}/etc/hadoop/hdfs-site.xml

${HADOOP_HOME}/etc/hadoop/yarn-site.xml

${HADOOP_HOME}/etc/hadoop/mapred-site.xml.template

The previous chapter already used "core-site.xml" and "hdfs-site.xml". The recommended parameters for each configuration file are listed below.

core-site.xml:

| Parameter | Value | Notes |

|---|---|---|

| fs.defaultFS | NameNode URI | hdfs://host:port/ |

| io.file.buffer.size | 131072 | Size of read/write buffer used in SequenceFiles. |

hdfs-site.xml, NameNode:

| Parameter | Value | Notes |

|---|---|---|

| dfs.namenode.name.dir | Path on the local filesystem where the NameNode stores the namespace and transactions logs persistently. | If this is a comma-delimited list of directories then the name table is replicated in all of the directories, for redundancy. |

| dfs.namenode.hosts / dfs.namenode.hosts.exclude | List of permitted/excluded DataNodes. | If necessary, use these files to control the list of allowable datanodes. |

| dfs.blocksize | 268435456 | HDFS blocksize of 256MB for large file-systems. |

| dfs.namenode.handler.count | 100 | More NameNode server threads to handle RPCs from large number of DataNodes. |

hdfs-site.xml, DataNode:

| Parameter | Value | Notes |

|---|---|---|

| dfs.datanode.data.dir | Comma separated list of paths on the local filesystem of a DataNode where it should store its blocks. | If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices. |

yarn-site.xml, ResourceManager and NodeManager:

| Parameter | Value | Notes |

|---|---|---|

| yarn.acl.enable | true / false | Enable ACLs? Defaults to false. |

| yarn.admin.acl | Admin ACL | ACL to set admins on the cluster. ACLs are of the form comma-separated-users space comma-separated-groups. Defaults to special value of * which means anyone. Special value of just space means no one has access. |

| yarn.log-aggregation-enable | false | Configuration to enable or disable log aggregation |

yarn-site.xml, ResourceManager:

| Parameter | Value | Notes |

|---|---|---|

| yarn.resourcemanager.address | ResourceManager host:port for clients to submit jobs. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |

| yarn.resourcemanager.scheduler.address | ResourceManager host:port for ApplicationMasters to talk to Scheduler to obtain resources. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |

| yarn.resourcemanager.resource-tracker.address | ResourceManager host:port for NodeManagers. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |

| yarn.resourcemanager.admin.address | ResourceManager host:port for administrative commands. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |

| yarn.resourcemanager.webapp.address | ResourceManager web-ui host:port. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |

| yarn.resourcemanager.hostname | ResourceManager host. | host Single hostname that can be set in place of setting all yarn.resourcemanager*address resources. Results in default ports for ResourceManager components. |

| yarn.resourcemanager.scheduler.class | ResourceManager Scheduler class. | CapacityScheduler (recommended), FairScheduler (also recommended), or FifoScheduler |

| yarn.scheduler.minimum-allocation-mb | Minimum limit of memory to allocate to each container request at the Resource Manager. | In MBs |

| yarn.scheduler.maximum-allocation-mb | Maximum limit of memory to allocate to each container request at the Resource Manager. | In MBs |

| yarn.resourcemanager.nodes.include-path / yarn.resourcemanager.nodes.exclude-path | List of permitted/excluded NodeManagers. | If necessary, use these files to control the list of allowable NodeManagers. |

yarn-site.xml, NodeManager:

| Parameter | Value | Notes |

|---|---|---|

| yarn.nodemanager.resource.memory-mb | Resource i.e. available physical memory, in MB, for given NodeManager | Defines total available resources on the NodeManager to be made available to running containers |

| yarn.nodemanager.vmem-pmem-ratio | Maximum ratio by which virtual memory usage of tasks may exceed physical memory | The virtual memory usage of each task may exceed its physical memory limit by this ratio. The total amount of virtual memory used by tasks on the NodeManager may exceed its physical memory usage by this ratio. |

| yarn.nodemanager.local-dirs | Comma-separated list of paths on the local filesystem where intermediate data is written. | Multiple paths help spread disk i/o. |

| yarn.nodemanager.log-dirs | Comma-separated list of paths on the local filesystem where logs are written. | Multiple paths help spread disk i/o. |

| yarn.nodemanager.log.retain-seconds | 10800 | Default time (in seconds) to retain log files on the NodeManager. Only applicable if log-aggregation is disabled. |

| yarn.nodemanager.remote-app-log-dir | /logs | HDFS directory where the application logs are moved on application completion. Need to set appropriate permissions. Only applicable if log-aggregation is enabled. |

| yarn.nodemanager.remote-app-log-dir-suffix | logs | Suffix appended to the remote log dir. Logs will be aggregated to ${yarn.nodemanager.remote-app-log-dir}/${user}/${thisParam}. Only applicable if log-aggregation is enabled. |

| yarn.nodemanager.aux-services | mapreduce_shuffle | Shuffle service that needs to be set for Map Reduce applications. |

yarn-site.xml, History Server:

| Parameter | Value | Notes |

|---|---|---|

| yarn.log-aggregation.retain-seconds | -1 | How long to keep aggregation logs before deleting them. -1 disables. Be careful, set this too small and you will spam the name node. |

| yarn.log-aggregation.retain-check-interval-seconds | -1 | Time between checks for aggregated log retention. If set to 0 or a negative value then the value is computed as one-tenth of the aggregated log retention time. Be careful, set this too small and you will spam the name node. |

mapred-site.xml, MapReduce applications:

| Parameter | Value | Notes |

|---|---|---|

| mapreduce.framework.name | yarn | Execution framework set to Hadoop YARN. |

| mapreduce.map.memory.mb | 1536 | Larger resource limit for maps. |

| mapreduce.map.java.opts | -Xmx1024M | Larger heap-size for child jvms of maps. |

| mapreduce.reduce.memory.mb | 3072 | Larger resource limit for reduces. |

| mapreduce.reduce.java.opts | -Xmx2560M | Larger heap-size for child jvms of reduces. |

| mapreduce.task.io.sort.mb | 512 | Higher memory-limit while sorting data for efficiency. |

| mapreduce.task.io.sort.factor | 100 | More streams merged at once while sorting files. |

| mapreduce.reduce.shuffle.parallelcopies | 50 | Higher number of parallel copies run by reduces to fetch outputs from very large number of maps. |

mapred-site.xml, MapReduce JobHistory Server:

| Parameter | Value | Notes |

|---|---|---|

| mapreduce.jobhistory.address | MapReduce JobHistory Server host:port | Default port is 10020. |

| mapreduce.jobhistory.webapp.address | MapReduce JobHistory Server Web UI host:port | Default port is 19888. |

| mapreduce.jobhistory.intermediate-done-dir | /mr-history/tmp | Directory where history files are written by MapReduce jobs. |

| mapreduce.jobhistory.done-dir | /mr-history/done | Directory where history files are managed by the MR JobHistory Server. |

2.4.3 Environment variables and configuration parameters

${HADOOP_HOME}/etc/hadoop/hadoop-env.sh

${HADOOP_HOME}/etc/hadoop/yarn-env.sh

By default, the following environment variables should be set for the daemons:

– JAVA_HOME

– HADOOP_PID_DIR

– HADOOP_SECURE_DN_PID_DIR

– HADOOP_LOG_DIR / YARN_LOG_DIR

– HADOOP_HEAPSIZE / YARN_HEAPSIZE

Per-daemon options variables:

| Daemon | Environment Variable |

|---|---|

| NameNode | HADOOP_NAMENODE_OPTS |

| DataNode | HADOOP_DATANODE_OPTS |

| Secondary NameNode | HADOOP_SECONDARYNAMENODE_OPTS |

| ResourceManager | YARN_RESOURCEMANAGER_OPTS |

| NodeManager | YARN_NODEMANAGER_OPTS |

| WebAppProxy | YARN_PROXYSERVER_OPTS |

| Map Reduce Job History Server | HADOOP_JOB_HISTORYSERVER_OPTS |

Per-daemon heap size variables:

| Daemon | Environment Variable |

|---|---|

| ResourceManager | YARN_RESOURCEMANAGER_HEAPSIZE |

| NodeManager | YARN_NODEMANAGER_HEAPSIZE |

| WebAppProxy | YARN_PROXYSERVER_HEAPSIZE |

| Map Reduce Job History Server | HADOOP_JOB_HISTORYSERVER_HEAPSIZE |

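2.5 Hadoop rack awareness

2.5.1 The concept of rack awareness

– The NameNode and the ResourceManager obtain the rack information of the slave nodes in the cluster by invoking an API in an administrator-configured module

– The HDFS and YARN components are rack-aware

– The API resolves a DNS name (or an IP address) to a rack ID

2.5.2 Rack awareness configuration

The following parameters, listed in "core-default.xml", configure rack awareness:

– topology.node.switch.mapping.impl selects the site-specific module to use

– topology.script.file.name specifies a script or command to run instead

– If topology.script.file.name is not set, the rack ID "/default-rack" is returned for any IP address passed in

Note: Hadoop 2.x names these properties net.topology.node.switch.mapping.impl and net.topology.script.file.name; the names without the "net." prefix are deprecated aliases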
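A topology script is simply an executable that receives one or more addresses as arguments and prints one rack ID per argument. The sketch below assumes a rack layout that this guide does not define: putting 10.168.0.101-102 in /rack1 and 10.168.0.103-105 in /rack2 is purely hypothetical, as are the function names rack_of and resolve_racks.

```shell
#!/usr/bin/env bash
# Sketch of a rack topology script; the rack layout below is hypothetical.

# rack_of NODE -> rack ID for one IP address or host name
rack_of() {
    case $1 in
        10.168.0.101|10.168.0.102) echo /rack1 ;;
        10.168.0.103|10.168.0.104|10.168.0.105) echo /rack2 ;;
        *) echo /default-rack ;;
    esac
}

# resolve_racks NODE... -> one rack ID per argument, in argument order
resolve_racks() {
    local node
    for node in "$@"; do
        rack_of "$node"
    done
}

# Hadoop passes the addresses on the command line
resolve_racks "$@"
```

Saved as an executable file, the script would be referenced from the topology script parameter described above. Unknown addresses fall back to /default-rack, which mirrors the behavior when no script is configured.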

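2.6 Hadoop monitoring

2.6.1 The concept of monitoring

– Hadoop provides a monitoring mechanism in which the NodeManager periodically runs an administrator-supplied script to check the health of each node

2.6.2 How monitoring works

– The administrator's script performs whatever checks are needed; if it finds the node unhealthy, it prints a line starting with the string ERROR to standard output

– The NodeManager runs the script periodically and checks its output; if a line starts with ERROR, the node is reported as unhealthy and the ResourceManager blacklists it (no further tasks are assigned to it)

– The NodeManager keeps running the script; once the node is healthy again, the ResourceManager removes it from the blacklist (tasks are assigned to it again)

Note: the node's health status can be viewed in the ResourceManager web interface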
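A health script only has to follow the output convention described above. The sketch below is a minimal example under assumptions: checking that certain commands are on the PATH is just one possible check, and require_command and the choice of java and rsync are invented for illustration.

```shell
#!/usr/bin/env bash
# Sketch of a node health script; the checks themselves are only examples.

# require_command NAME -> prints an ERROR line when NAME is not on the PATH
require_command() {
    command -v "$1" > /dev/null 2>&1 || echo "ERROR: required command '$1' not found"
}

# Any line printed with a leading ERROR marks the node as unhealthy;
# printing nothing means the node is healthy.
require_command java
require_command rsync
```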

2.6.3 Node health monitoring parameters in yarn-site.xml

| Parameter | Value | Notes |

|---|---|---|

| yarn.nodemanager.health-checker.script.path | Node health script | Script to check for node's health status. |

| yarn.nodemanager.health-checker.script.opts | Node health script options | Options for script to check for node's health status. |

| yarn.nodemanager.health-checker.interval-ms | Node health script interval | Time interval for running health script. |

| yarn.nodemanager.health-checker.script.timeout-ms | Node health script timeout interval | Timeout for health script execution. |

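Note:

– The health script is not supposed to report an error when only some of the local disks have gone bad

– The NodeManager itself periodically checks the health of the local disks, specifically the directories listed in yarn.nodemanager.local-dirs and yarn.nodemanager.log-dirs

– Once the number of bad directories reaches the threshold derived from yarn.nodemanager.disk-health-checker.min-healthy-disks, the whole node is marked unhealthy and this information is sent to the ResourceManager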

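2.7 The slaves file for slave nodes

– Each line of this file declares one slave machine

– Each line holds either the host name or the IP address of that machine

By convention, the roles are divided as follows:

– Machines running the NameNode and ResourceManager roles are called masters

– Machines running the DataNode and NodeManager roles are called slaves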
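Because the slaves file is one name per line, it is easy to generate. The sketch below assumes sequentially numbered host names such as the hd03 to hd05 DataNodes used in the practice chapter of this guide; slaves_entries is a hypothetical helper, not a Hadoop tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper that prints slaves-file entries for numbered hosts.

# slaves_entries PREFIX FIRST LAST -> one host name per line, two-digit suffix
slaves_entries() {
    local prefix=$1 i=$2 last=$3
    while [ "$i" -le "$last" ]; do
        printf '%s%02d\n' "$prefix" "$i"
        i=$(( i + 1 ))
    done
}

# Prints hd03, hd04 and hd05, one per line
slaves_entries hd 3 5
```

Redirecting the output to the slaves file in the configuration directory (for example `slaves_entries hd 3 5 > "${HADOOP_CONF_DIR}/slaves"`) would produce the file described above.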

2.8 Hadoop logging

2.8.1 The concept of logging

Hadoop uses Apache log4j for logging, via the Apache Commons Logging framework

2.8.2 Logging configuration

${HADOOP_HOME}/etc/hadoop/log4j.properties

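Note: this file configures logging for the Hadoop daemons.

2.9 Applying the Hadoop configuration

The configuration files above take effect only when they are placed in the directory named by the "HADOOP_CONF_DIR" environment variable.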

3 Best Practice

3.1 System environment

3.1.1 System information

IP Address = 10.168.0.10[1-5]

OS = CentOS 7.x x86_64

Host Name = hd0[1-5].cmdschool.org

The roles are assigned as follows:

Apache Hadoop HDFS NameNode(hdfs-nn) = hd01.cmdschool.org

Apache Hadoop HDFS SecondaryNameNode(hdfs-snn) = hd02.cmdschool.org

Apache Hadoop HDFS DataNode(hdfs-dn) = hd0[3-5].cmdschool.org

3.1.2 System update

In hd0[1-5],

yum update -y

3.1.3 Install common tools

In hd0[1-5],

yum install -y vim wget tree

3.2 Software environment

In hd0[1-5],

3.2.1 Install the JDK

Install jdk-8u121-linux-x64 as described in the following article:

https://www.cmdschool.org/archives/397

After installation, check the JDK with the following command:

java -version

The command prints the following:

java version "1.8.0_121"

Java(TM) SE Runtime Environment (build 1.8.0_121-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.121-b13, mixed mode)

3.2.2 Configure name resolution

In hd0[1-5],

echo '10.168.0.101 hd01 hd01.cmdschool.org' >> /etc/hosts

echo '10.168.0.102 hd02 hd02.cmdschool.org' >> /etc/hosts

echo '10.168.0.103 hd03 hd03.cmdschool.org' >> /etc/hosts

echo '10.168.0.104 hd04 hd04.cmdschool.org' >> /etc/hosts

echo '10.168.0.105 hd05 hd05.cmdschool.org' >> /etc/hosts

Note: this is for testing only; use DNS in production
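Running the echo commands twice would append duplicate entries. The following sketch generates the same five lines in a loop and appends only those that are missing; hosts_entries and add_missing_entries are hypothetical helper names, and the target file is passed as an argument so the functions can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for adding the hosts entries idempotently.

# hosts_entries -> the five hosts lines used in this guide
hosts_entries() {
    local i
    for i in 1 2 3 4 5; do
        echo "10.168.0.10${i} hd0${i} hd0${i}.cmdschool.org"
    done
}

# add_missing_entries FILE -> appends only the lines FILE does not contain yet
add_missing_entries() {
    local file=$1 line
    hosts_entries | while read -r line; do
        grep -qxF -- "$line" "$file" || echo "$line" >> "$file"
    done
}

# Preview the entries without touching any file
hosts_entries
```

Calling `add_missing_entries /etc/hosts` as root would then apply the entries, and repeating the call leaves the file unchanged.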

3.2.3 Configure public key authentication for root from the management node to the slave nodes

In hd01,

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub root@hd02

ssh-copy-id -i ~/.ssh/id_rsa.pub root@hd03

ssh-copy-id -i ~/.ssh/id_rsa.pub root@hd04

ssh-copy-id -i ~/.ssh/id_rsa.pub root@hd05

When this is done, test it with the following commands:

ssh root@hd02

ssh root@hd03

ssh root@hd04

ssh root@hd05

3.2.4 Install rsync

In hd0[1-5],

yum install -y rsync

3.2.5 Create the service user

In hd0[1-5],

groupadd hadoop

groupadd hdfs

useradd -g hdfs -G hadoop -d /var/lib/hadoop-hdfs/ hdfs

3.2.6 Configure public key authentication for the hdfs user from the master to the slave nodes

In hd01,

su - hdfs

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

exit

ssh hd02 mkdir -p /var/lib/hadoop-hdfs/.ssh/

scp /var/lib/hadoop-hdfs/.ssh/id_rsa.pub hd02:/var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd02 chown hdfs:hdfs -R /var/lib/hadoop-hdfs/.ssh/

ssh hd02 chmod 0600 /var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd03 mkdir -p /var/lib/hadoop-hdfs/.ssh/

scp /var/lib/hadoop-hdfs/.ssh/id_rsa.pub hd03:/var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd03 chown hdfs:hdfs -R /var/lib/hadoop-hdfs/.ssh/

ssh hd03 chmod 0600 /var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd04 mkdir -p /var/lib/hadoop-hdfs/.ssh/

scp /var/lib/hadoop-hdfs/.ssh/id_rsa.pub hd04:/var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd04 chown hdfs:hdfs -R /var/lib/hadoop-hdfs/.ssh/

ssh hd04 chmod 0600 /var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd05 mkdir -p /var/lib/hadoop-hdfs/.ssh/

scp /var/lib/hadoop-hdfs/.ssh/id_rsa.pub hd05:/var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd05 chown hdfs:hdfs -R /var/lib/hadoop-hdfs/.ssh/

ssh hd05 chmod 0600 /var/lib/hadoop-hdfs/.ssh/authorized_keys

When this is done, be sure to test public key authentication with the following commands:

su - hdfs

ssh hd01

ssh hd02

ssh hd03

ssh hd04

ssh hd05

Note: if every login succeeds without a password prompt, the configuration is complete.
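The four per-host commands in this step repeat for every slave, so they can be produced by a loop. The sketch below is a dry run under assumptions: key_copy_commands is a hypothetical helper that only prints the commands for review, it executes nothing on its own, and it reuses the paths and host names from this step unchanged.

```shell
#!/usr/bin/env bash
# Dry-run helper: prints the key distribution commands instead of running them.

# key_copy_commands HOST... -> the four commands of this step for each host
key_copy_commands() {
    local ssh_dir=/var/lib/hadoop-hdfs/.ssh host
    for host in "$@"; do
        echo "ssh $host mkdir -p $ssh_dir/"
        echo "scp $ssh_dir/id_rsa.pub $host:$ssh_dir/authorized_keys"
        echo "ssh $host chown hdfs:hdfs -R $ssh_dir/"
        echo "ssh $host chmod 0600 $ssh_dir/authorized_keys"
    done
}

# Print the commands for the four remote hosts
key_copy_commands hd02 hd03 hd04 hd05
```

After checking the printed commands, they could be executed by piping the output to a shell, for example `key_copy_commands hd02 hd03 hd04 hd05 | sh`.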

3.2.7 Configure public key authentication for the hdfs user from the slave nodes to the master

In hd01,

scp /var/lib/hadoop-hdfs/.ssh/id_rsa hd02:/var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd02 chown hdfs:hdfs /var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd02 chmod 0600 /var/lib/hadoop-hdfs/.ssh/id_rsa

scp /var/lib/hadoop-hdfs/.ssh/id_rsa hd03:/var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd03 chown hdfs:hdfs /var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd03 chmod 0600 /var/lib/hadoop-hdfs/.ssh/id_rsa

scp /var/lib/hadoop-hdfs/.ssh/id_rsa hd04:/var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd04 chown hdfs:hdfs /var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd04 chmod 0600 /var/lib/hadoop-hdfs/.ssh/id_rsa

scp /var/lib/hadoop-hdfs/.ssh/id_rsa hd05:/var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd05 chown hdfs:hdfs /var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd05 chmod 0600 /var/lib/hadoop-hdfs/.ssh/id_rsa

When this is done, be sure to test public key authentication with the following commands:

In hd0[2-5],

su - hdfs

ssh hd01

Note: if the login succeeds without a password prompt, the configuration is complete.

3.3 Deploy the Apache Hadoop package

In hd0[1-5],

3.3.1 Download the binary package

cd ~

wget https://archive.apache.org/dist/hadoop/common/hadoop-2.6.5/hadoop-2.6.5.tar.gz

Other versions can be downloaded from:

https://archive.apache.org/dist/hadoop/common/

3.3.2 Extract the package

cd ~

tar -xf hadoop-2.6.5.tar.gz

3.3.3 Install the package

cd ~

mv hadoop-2.6.5 /usr/

chown hdfs:hdfs -R /usr/hadoop-2.6.5/

chmod 775 -R /usr/hadoop-2.6.5/

3.3.4 Create a symbolic link to the configuration directory

ln -s /usr/hadoop-2.6.5/etc/hadoop /etc/hadoop

Note: the link simply makes administration easier

3.4 Configure the Apache Hadoop package

In hd0[1-5],

3.4.1 Configure environment variables

vim /etc/profile.d/hadoop.sh

Add the following settings:

export HADOOP_HOME=/usr/hadoop-2.6.5

export HADOOP_PREFIX=${HADOOP_HOME}

export HADOOP_YARN_HOME=${HADOOP_HOME}

export PATH=${HADOOP_HOME}/bin:$PATH

export PATH=${HADOOP_HOME}/sbin:$PATH

export HADOOP_CONF_DIR=/etc/hadoop

export HADOOP_LOG_DIR=/var/log/hadoop-hdfs

export HADOOP_PID_DIR=/var/run/hadoop-hdfs

export HADOOP_MASTER=hd01:${HADOOP_HOME}

export HADOOP_IDENT_STRING=$USER

export HADOOP_NICENESS=0

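The settings are explained below, line by line; pay particular attention to line 9:

– Line 1 declares the Hadoop installation directory

– Line 2 reuses the value of "HADOOP_HOME"

– Line 3 reuses the value of "HADOOP_HOME" to tell YARN where it is installed

– Line 4 adds Hadoop's bin directory to the executable search path

– Line 5 adds Hadoop's sbin directory to the executable search path

– Line 6 declares the location of the Hadoop configuration files

– Line 7 declares the location of the Hadoop log files

– Line 8 declares the location of the Hadoop PID files

– Line 9 makes the non-master servers use rsync to synchronize from the "/usr/hadoop-2.6.5" directory on hd01 (this is the key setting)

– Line 10 declares the user the daemons run as ("$USER" is the current user)

– Line 11 declares the scheduling priority of the processes; the default is "0"

Create the declared directories and set their permissions:

mkdir -p /var/log/hadoop-hdfs

chown hdfs:hdfs /var/log/hadoop-hdfs

chmod 775 /var/log/hadoop-hdfs

mkdir -p /var/run/hadoop-hdfs

chown hdfs:hdfs /var/run/hadoop-hdfs

chmod 775 /var/run/hadoop-hdfs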

3.4.2 Load the environment variables

source /etc/profile.d/hadoop.sh

3.4.3 Test the installation

hadoop version

The output looks like this:

Hadoop 2.6.5

Subversion https://github.com/apache/hadoop.git -r e8c9fe0b4c252caf2ebf1464220599650f119997

Compiled by sjlee on 2016-10-02T23:43Z

Compiled with protoc 2.5.0

From source with checksum f05c9fa095a395faa9db9f7ba5d754

This command was run using /usr/hadoop-2.6.5/share/hadoop/common/hadoop-common-2.6.5.jar

3.5 Configure the NameNode (master)

In hd01

3.5.1 Define the core-site.xml configuration file

cp /etc/hadoop/core-site.xml /etc/hadoop/core-site.xml.default

vim /etc/hadoop/core-site.xml

Change the configuration as follows:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hd01:9000</value>

</property>

</configuration>

Note: the "fs.defaultFS" parameter designates "hd01" as the NameNode

3.5.2 Define the hdfs-site.xml configuration file

cp /etc/hadoop/hdfs-site.xml /etc/hadoop/hdfs-site.xml.default

vim /etc/hadoop/hdfs-site.xml

Change the configuration as follows:

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/dfs/nn</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>/data/dfs/snn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/dfs/dn</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

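Note:

– "dfs.namenode.name.dir" defines where the NameNode stores its data

– "dfs.namenode.checkpoint.dir" defines where the SecondaryNameNode stores its data

– "dfs.namenode.checkpoint.dir" depends on the configuration file "/etc/hadoop/hadoop-metrics2.properties"

– "dfs.datanode.data.dir" defines where the DataNode stores its data

– "dfs.replication" defines the number of copies kept of each block

You also need to create the data directory named in the configuration:

mkdir -p /data/dfs/nn

chown hdfs:hadoop /data/dfs/nn

chmod 775 /data/dfs/nn

Alternatively, and preferably, grant permissions on the parent directory and let the "nn" directory be created automatically:

mkdir -p /data/dfs

chown hdfs:hadoop /data/dfs

chmod 775 /data/dfs

3.5.3 Format the NameNode

su - hdfs -c 'hadoop namenode -format'

The following messages are displayed: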

DEPRECATED: Use of this script to execute hdfs command is deprecated. Instead use the hdfs command for it. 2019-11-19 09:42:02,762 INFO [main] namenode.NameNode (StringUtils.java:startupShutdownMessage(633)) - STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting NameNode STARTUP_MSG: host = hd01/10.168.0.101 STARTUP_MSG: args = [-format] STARTUP_MSG: version = 2.6.5 STARTUP_MSG: classpath = /etc/hadoop:/usr/hadoop-2.6.5/share/hadoop/common/lib/activation1.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/guava-11.0.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-net-3.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/xz-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/htrace-core-3.0.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/hadoop-annotations-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/curator-framework-2.6.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/us
r/hadoop-2.6.5/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/junit-4.11.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jettison-1.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/paranamer-2.3.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-el-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/hadoop-auth-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/avro-1.7.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/gson-2.2.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/c
urator-client-2.6.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/hadoop-common-2.6.5-tests.jar:/usr/hadoop-2.6.5/share/hadoop/common/hadoop-nfs-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/hadoop-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/u
sr/hadoop-2.6.5/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-2.6.5-tests.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/activation-1.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jline-0.9.94.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/xz-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/hadoo
p-2.6.5/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/guice-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-api-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-registry-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-client-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jackso
n-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.5-tests.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-ma
preduce-client-jobclient-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.5.jar
STARTUP_MSG: build = https://github.com/apache/hadoop.git -r e8c9fe0b4c252caf2ebf1464220599650f119997; compiled by 'sjlee' on 2016-10-02T23:43Z
STARTUP_MSG: java = 1.8.0_121
************************************************************/
2019-11-19 09:42:02,777 INFO [main] namenode.NameNode (SignalLogger.java:register(91)) - registered UNIX signal handlers for [TERM, HUP, INT]
[...]
2019-11-19 09:42:04,523 INFO [Thread-1] namenode.NameNode (StringUtils.java:run(659)) - SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hd01/10.168.0.101
************************************************************/

Note: "[...]" marks omitted output.

3.5.6 Start the daemon

su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh start namenode'

The following output is displayed:

starting namenode, logging to /var/log/hadoop-hdfs/hadoop-hdfs-namenode-hd01.cmdschool.org.out
2019-11-19 09:46:25,181 INFO [main] namenode.NameNode (StringUtils.java:startupShutdownMessage(633)) - STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = hd01/10.168.0.101
STARTUP_MSG: args = []
STARTUP_MSG: version = 2.6.5
STARTUP_MSG: classpath = /etc/hadoop:/usr/hadoop-2.6.5/share/hadoop/common/lib/activation-1.1.jar:[...]:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.5.jar
STARTUP_MSG: build = https://github.com/apache/hadoop.git -r e8c9fe0b4c252caf2ebf1464220599650f119997; compiled by 'sjlee' on 2016-10-02T23:43Z
STARTUP_MSG: java = 1.8.0_121
************************************************************/
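The startup banner records the host, version, and Java runtime of the daemon that was just launched. As a minimal sketch (the helper name `startup_field` is hypothetical and not part of Hadoop), a single banner field could be pulled out of the log like this, assuming each `STARTUP_MSG` entry sits on its own line in the real file:

```shell
# startup_field KEY: read a daemon log on stdin and print the value of the
# first "STARTUP_MSG: KEY = VALUE" entry. Hypothetical helper for illustration.
startup_field() {
    awk -v key="$1" '
        $1 == "STARTUP_MSG:" && $2 == key && $3 == "=" {
            sub(/^[^=]*=[ \t]*/, "")   # strip the label up to the first equals sign
            print
            exit
        }'
}
```

For example, `startup_field version < /var/log/hadoop-hdfs/hadoop-hdfs-namenode-hd01.cmdschool.org.out` reads the `.out` file named in the "logging to" line above.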

Use the following command to confirm that the process has started:

netstat -antp | grep java | grep LISTEN

The following output is displayed:

tcp 0 0 10.168.0.101:9000 0.0.0.0:* LISTEN 8796/java
tcp 0 0 0.0.0.0:50070 0.0.0.0:* LISTEN 8796/java
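The same check can be scripted instead of read by eye. The sketch below parses `netstat -antp` output and verifies that every expected port is in the LISTEN state; the function names are hypothetical, and the column positions assume the usual Linux net-tools layout (local address in column 4, state in column 6):

```shell
# listening_ports: read `netstat -antp` output on stdin and print the port
# number of every TCP socket in the LISTEN state, one per line.
listening_ports() {
    awk '$1 ~ /^tcp/ && $6 == "LISTEN" { n = split($4, a, ":"); print a[n] }'
}

# all_listening PORT...: succeed only if every PORT is listed on stdin.
all_listening() {
    ports=$(listening_ports)
    for p in "$@"; do
        printf '%s\n' "$ports" | grep -qx "$p" || return 1
    done
}
```

On the NameNode this could be used as `netstat -antp | all_listening 9000 50070 && echo "NameNode ports are up"`.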

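With this test complete, stop the service with the following command:

su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh stop namenode'

3.5.7 Configure the service control script

vim /usr/lib/systemd/system/hdfs-nn.service

Add the following configuration: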

[Unit]
Description=Apache HDFS namenode manager
Wants=network.target
# Start only after the network is configured, because the NameNode binds to a fixed address.
After=network.target
Documentation=https://hadoop.apache.org/docs/

[Service]
Type=forking
ExecStartPre=/bin/sh -c 'mkdir -p /var/run/hadoop-hdfs;chown hdfs:hdfs /var/run/hadoop-hdfs;chmod 775 /var/run/hadoop-hdfs'
ExecStartPre=/bin/sh -c 'mkdir -p /var/log/hadoop-hdfs;chown hdfs:hdfs /var/log/hadoop-hdfs;chmod 775 /var/log/hadoop-hdfs'
ExecStartPre=/bin/sh -c 'mkdir -p /data/dfs/nn;chown hdfs:hadoop /data/dfs/nn;chmod 775 /data/dfs/nn'
ExecStart=/usr/bin/su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh start namenode'
ExecStop=/usr/bin/su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh stop namenode'
PIDFile=/var/run/hadoop-hdfs/hadoop-hdfs-namenode.pid
Restart=on-success

[Install]
WantedBy=multi-user.target
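With `Type=forking`, systemd tracks the daemon through `PIDFile`, so that path has to match the file `hadoop-daemon.sh` writes. In Hadoop 2.x the script names the file `hadoop-<ident>-<command>.pid` inside `HADOOP_PID_DIR`, where the ident defaults to the user running the daemon. The sketch below rebuilds that name; the function name is hypothetical, and it assumes `HADOOP_PID_DIR` is set to `/var/run/hadoop-hdfs` elsewhere in this setup:

```shell
# hadoop_pid_file PID_DIR IDENT COMMAND: print the PID file path that
# hadoop-daemon.sh (Hadoop 2.x) is expected to write for a daemon.
# Hypothetical helper; PID_DIR must match HADOOP_PID_DIR in your environment.
hadoop_pid_file() {
    printf '%s/hadoop-%s-%s.pid\n' "$1" "$2" "$3"
}
```

Calling `hadoop_pid_file /var/run/hadoop-hdfs hdfs namenode` yields the `PIDFile` value used in the unit above.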

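After editing the unit file, reload the systemd configuration with the following command:

systemctl daemon-reload

Use the following commands to control the service and check its status: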

systemctl start hdfs-nn.service
systemctl status hdfs-nn.service
systemctl stop hdfs-nn.service
systemctl restart hdfs-nn.service
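When these commands run from a script rather than an interactive shell, it can help to poll until systemd reports the unit as active before continuing. A minimal sketch, assuming the `hdfs-nn.service` unit name used here; the function name `wait_active` is hypothetical:

```shell
# wait_active UNIT TRIES: poll `systemctl is-active` once per second and
# succeed as soon as UNIT is active; fail after TRIES unsuccessful checks.
wait_active() {
    tries=$2
    while [ "$tries" -gt 0 ]; do
        systemctl is-active --quiet "$1" && return 0
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}
```

A typical call would be `systemctl start hdfs-nn.service && wait_active hdfs-nn.service 10`.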

Once testing is complete, enable the service so that it starts automatically at boot:

systemctl enable hdfs-nn.service

3.5.8 Open the node's ports

firewall-cmd --permanent --add-port 50070/tcp --add-port 9000/tcp
firewall-cmd --reload
firewall-cmd --list-all

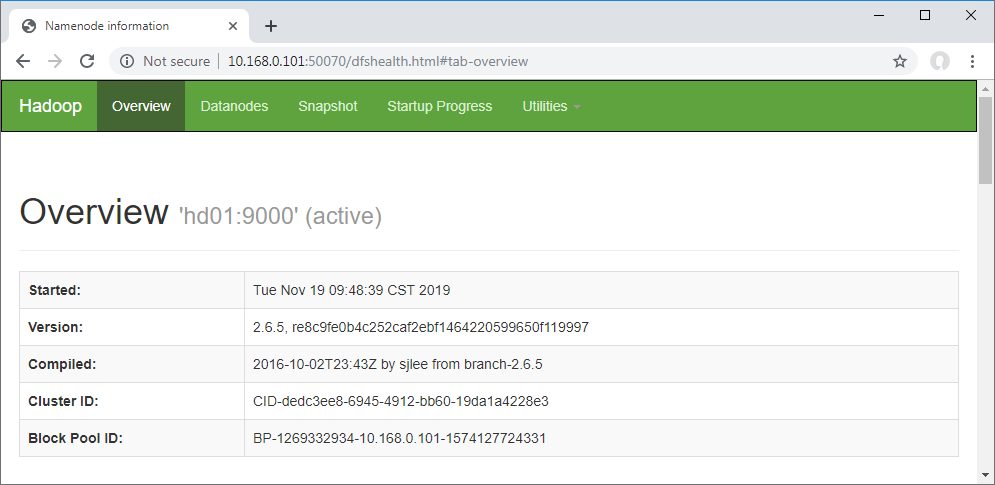

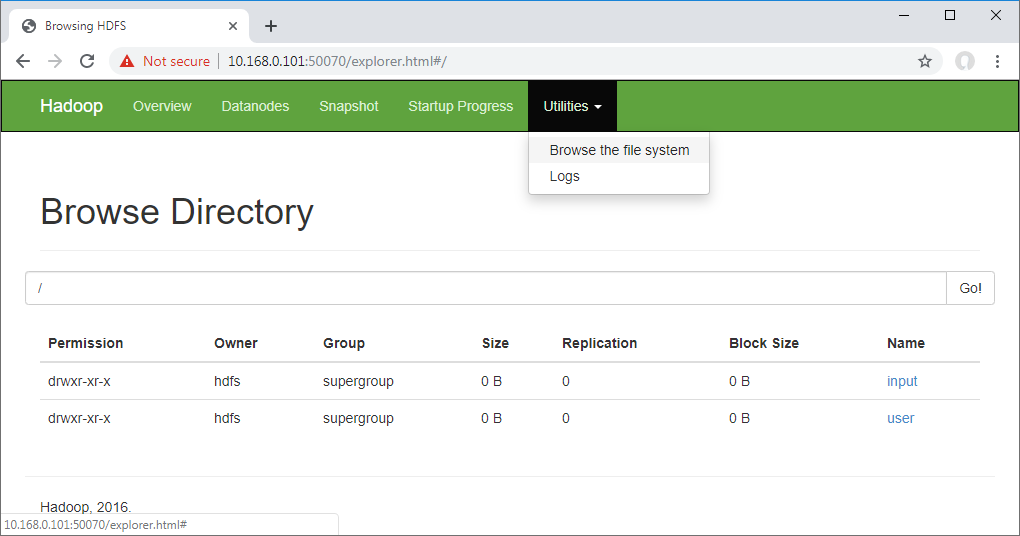
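Because each node role opens a different set of ports, the firewall step is easy to wrap in a small helper. This is only a sketch: the function name and the `DRY_RUN` switch are hypothetical, and it assumes firewalld is the active firewall, as in the commands above.

```shell
# open_tcp_ports PORT...: permanently open each TCP port, then reload firewalld.
# Set DRY_RUN=1 to print the commands instead of running them.
open_tcp_ports() {
    run=${DRY_RUN:+echo}
    for port in "$@"; do
        $run firewall-cmd --permanent --add-port "$port/tcp" || return 1
    done
    $run firewall-cmd --reload
}
```

On the NameNode the call would be `open_tcp_ports 50070 9000`; setting `DRY_RUN=1` first shows what would be executed without touching the firewall.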

3.5.9 Test in a browser

In Windows Client

http://10.168.0.101:50070/

The NameNode web interface is displayed.
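The web interface can also be probed from a command line, which is handy when no browser is available. A minimal sketch, assuming `curl` is installed on the client; the function name `webui_up` is hypothetical:

```shell
# webui_up URL: succeed if URL answers without an HTTP error within 5 seconds.
# -f makes curl fail on HTTP status 400 and above, -L follows redirects.
webui_up() {
    curl -s -f -L -o /dev/null -m 5 "$1"
}
```

For the NameNode configured here, the call would be `webui_up http://10.168.0.101:50070/ && echo "web UI reachable"`.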

3.6 Configure the Secondary NameNode

3.6.1 Create the data storage directory

In hd02

mkdir -p /data/dfs/snn/
chown hdfs:hadoop /data/dfs/snn/
chmod 775 /data/dfs/snn/

Alternatively, and preferably, grant permissions on the parent directory and let the "snn" directory be created automatically:

mkdir -p /data/dfs
chown hdfs:hadoop /data/dfs
chmod 775 /data/dfs
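The create, chown, chmod sequence recurs for every storage directory in this guide, so it can be folded into one idempotent helper. The following is a sketch with a hypothetical function name; changing ownership still requires root:

```shell
# prepare_dir DIR MODE [OWNER:GROUP]: create DIR if it is missing, then apply
# the mode and, when given, the ownership. Safe to run more than once.
prepare_dir() {
    mkdir -p "$1" || return 1
    chmod "$2" "$1" || return 1
    if [ -n "${3:-}" ]; then
        chown "$3" "$1" || return 1
    fi
}
```

The parent-directory variant above then becomes `prepare_dir /data/dfs 775 hdfs:hadoop`.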

3.6.2 Start the daemon

In hd02

su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh start secondarynamenode'

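Because the environment variable "HADOOP_MASTER" is declared, the master node's configuration is synchronized to this host automatically. The following output is also displayed: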

starting secondarynamenode, logging to /var/log/hadoop-hdfs/hadoop-hdfs-secondarynamenode-hd02.cmdschool.org.out
2019-11-19 09:56:58,403 INFO [main] namenode.SecondaryNameNode (StringUtils.java:startupShutdownMessage(633)) - STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting SecondaryNameNode
STARTUP_MSG: host = hd02/10.168.0.102
STARTUP_MSG: args = []
STARTUP_MSG: version = 2.6.5
STARTUP_MSG: classpath = /etc/hadoop:/usr/hadoop-2.6.5/share/hadoop/common/lib/activation-1.1.jar:[...]:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.5.jar
STARTUP_MSG: build = https://github.com/apache/hadoop.git -r e8c9fe0b4c252caf2ebf1464220599650f119997; compiled by 'sjlee' on 2016-10-02T23:43Z
STARTUP_MSG: java = 1.8.0_121
************************************************************/

Use the following command to confirm that the process has started:

netstat -antp | grep java | grep LISTEN

The following output is displayed:

tcp 0 0 0.0.0.0:50090 0.0.0.0:* LISTEN 63253/java

With this test complete, stop the service with the following command:

su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh stop secondarynamenode'

3.6.3 Configure the service control script

In hd02

vim /usr/lib/systemd/system/hdfs-snn.service

Add the following configuration:

[Unit]
Description=Apache HDFS secondarynamenode manager
Wants=network.target
# Start only after the network is configured, because the daemon must reach the NameNode.
After=network.target
Documentation=https://hadoop.apache.org/docs/

[Service]
Type=forking
ExecStartPre=/bin/sh -c 'mkdir -p /var/run/hadoop-hdfs;chown hdfs:hdfs /var/run/hadoop-hdfs;chmod 775 /var/run/hadoop-hdfs'
ExecStartPre=/bin/sh -c 'mkdir -p /var/log/hadoop-hdfs;chown hdfs:hdfs /var/log/hadoop-hdfs;chmod 775 /var/log/hadoop-hdfs'
ExecStartPre=/bin/sh -c 'mkdir -p /data/dfs/snn;chown hdfs:hadoop /data/dfs/snn;chmod 775 /data/dfs/snn'
ExecStart=/usr/bin/su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh start secondarynamenode'
ExecStop=/usr/bin/su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh stop secondarynamenode'
PIDFile=/var/run/hadoop-hdfs/hadoop-hdfs-secondarynamenode.pid
Restart=on-success

[Install]
WantedBy=multi-user.target
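This unit differs from the NameNode unit of section 3.5.7 only in the daemon name and the data directory, so it could be derived from that file rather than typed again. The sketch below is one way to do that with `sed`; the function name is hypothetical, and it assumes a copy of `hdfs-nn.service` is available on the host where it runs:

```shell
# nn_to_snn_unit: read the NameNode unit on stdin and print the equivalent
# Secondary NameNode unit on stdout.
nn_to_snn_unit() {
    # Rewrite the data directory first, then the daemon name everywhere else.
    sed -e 's#/data/dfs/nn#/data/dfs/snn#g' \
        -e 's/namenode/secondarynamenode/g'
}
```

For example: `nn_to_snn_unit < hdfs-nn.service > /usr/lib/systemd/system/hdfs-snn.service`.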

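After editing the unit file, reload the systemd configuration with the following command:

systemctl daemon-reload

Use the following commands to control the service and check its status: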

systemctl start hdfs-snn.service
systemctl status hdfs-snn.service
systemctl stop hdfs-snn.service
systemctl restart hdfs-snn.service

Once testing is complete, enable the service so that it starts automatically at boot:

systemctl enable hdfs-snn.service

3.6.5 Open the node's ports

In hd02

firewall-cmd --permanent --add-port 50090/tcp
firewall-cmd --reload
firewall-cmd --list-all

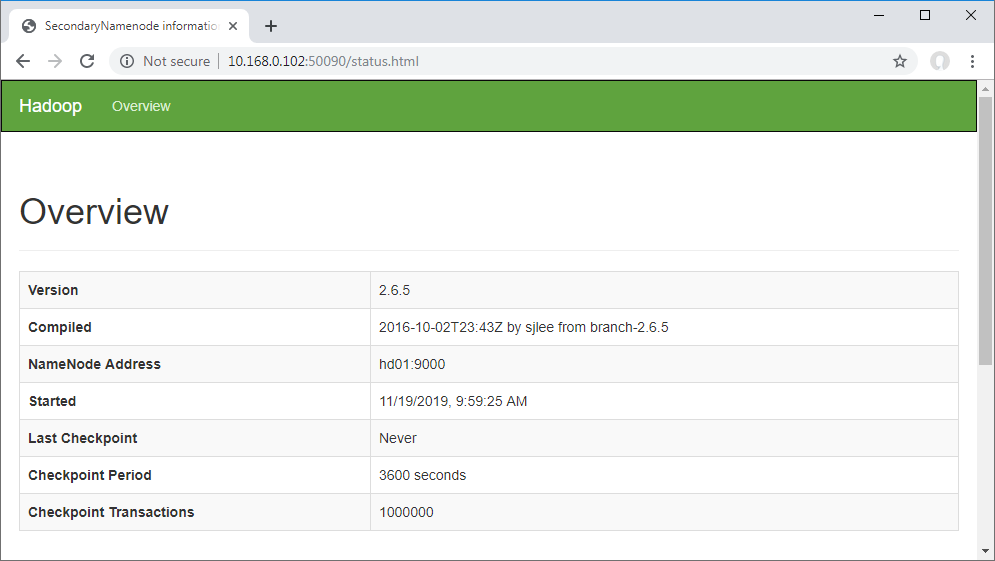

3.6.6 Test the service in a browser

In Windows Client

http://10.168.0.102:50090

The Secondary NameNode web interface is displayed.

3.7 Configure the DataNodes

3.7.1 Create the data storage directory

In hd0[3-5]

mkdir -p /data/dfs/dn/
chown hdfs:hadoop /data/dfs/dn/
chmod 775 /data/dfs/dn/

Alternatively, and preferably, grant permissions on the parent directory and let the "dn" directory be created automatically:

mkdir -p /data/dfs
chown hdfs:hadoop /data/dfs
chmod 775 /data/dfs
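Since the same commands have to run on hd03, hd04, and hd05, they can be pushed from one admin host instead of being typed three times. This is a sketch under stated assumptions: root SSH access to each DataNode and resolvable host names are assumed, and the function names and the `DRY_RUN` switch are hypothetical.

```shell
# datanode_hosts: print the DataNode host names hd03..hd05, one per line.
datanode_hosts() {
    for i in 3 4 5; do
        printf 'hd%02d\n' "$i"
    done
}

# on_datanodes COMMAND: run COMMAND on every DataNode over ssh as root
# (assumes key-based root login). Set DRY_RUN=1 to print the ssh command
# lines instead of running them.
on_datanodes() {
    run=${DRY_RUN:+echo}
    for host in $(datanode_hosts); do
        $run ssh "root@$host" "$1" || return 1
    done
}
```

For example, `on_datanodes 'mkdir -p /data/dfs && chown hdfs:hadoop /data/dfs && chmod 775 /data/dfs'` would apply the parent-directory variant to all three nodes.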

3.7.2 Start the daemon

In hd0[3-5]

su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh start datanode'

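Because the environment variable "HADOOP_MASTER" is declared, the master node's configuration is synchronized to this host automatically. The following output is also displayed: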

2019-11-19 10:09:15,362 INFO [main] datanode.DataNode (StringUtils.java:startupShutdownMessage(633)) - STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting DataNode STARTUP_MSG: host = hd03/10.168.0.103 STARTUP_MSG: args = [] STARTUP_MSG: version = 2.6.5 STARTUP_MSG: classpath = /etc/hadoop:/usr/hadoop-2.6.5/share/hadoop/common/lib/activation-1.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/guava-11.0.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-net-3.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/xz-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/htrace-core-3.0.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/hadoop-annotations-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/curator-framework-2.6.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/httpco
re-4.2.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/junit-4.11.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jettison-1.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/paranamer-2.3.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-el-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/hadoop-auth-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/avro-1.7.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/gson-2.2.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/curator-client-2.6.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/hadoop-2.6.5/share/hadoop/
common/lib/commons-beanutils-core-1.8.0.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/common/hadoop-common-2.6.5-tests.jar:/usr/hadoop-2.6.5/share/hadoop/common/hadoop-nfs-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/common/hadoop-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-codec
-1.4.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-2.6.5-tests.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/activation-1.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jline-0.9.94.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/xz-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-client-1.9.jar
:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/guice-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-api-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-registry-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-client-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/hadoop-2.6.5/sh
are/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.5-tests.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.5.jar:/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.5.jar:
/usr/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.5.jar
STARTUP_MSG: build = https://github.com/apache/hadoop.git -r e8c9fe0b4c252caf2ebf1464220599650f119997; compiled by 'sjlee' on 2016-10-02T23:43Z
STARTUP_MSG: java = 1.8.0_121
************************************************************/

Use the following command to confirm that the DataNode process has started and is listening:

netstat -antp | grep java | grep LISTEN

The output should look similar to the following:

tcp 0 0 0.0.0.0:50010 0.0.0.0:* LISTEN 11996/java
tcp 0 0 0.0.0.0:50075 0.0.0.0:* LISTEN 11996/java
tcp 0 0 0.0.0.0:50020 0.0.0.0:* LISTEN 11996/java
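
The manual check above can also be scripted. The following is a minimal sketch, not part of the original procedure: it reads `netstat -antp` style lines on standard input and reports whether each of the three ports from the sample output (50010, 50020, 50075) is in the LISTEN state. The function name `check_dn_ports` is hypothetical, and the port list assumes the DataNode addresses were left at the values shown above.

```shell
#!/bin/sh
# check_dn_ports (hypothetical helper): read netstat-style lines on stdin
# and report which of the expected DataNode ports are in LISTEN state.
# Assumption: the ports are the ones seen in the sample output above.
check_dn_ports() {
    input=$(cat)      # capture stdin once so it can be scanned per port
    missing=0
    for port in 50010 50020 50075; do
        # Match the local address column (":PORT ") on a LISTEN line.
        if printf '%s\n' "$input" | grep -Eq ":${port} .*LISTEN"; then
            echo "port ${port}: listening"
        else
            echo "port ${port}: NOT listening"
            missing=1
        fi
    done
    # Non-zero exit status when at least one port is missing.
    return "$missing"
}
```

A possible invocation is `netstat -antp | grep java | check_dn_ports`; the exit status is 0 only when all three ports are listening, which makes the function usable in a startup check.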

Once this test is complete, stop the service with the following command:

su - hdfs -c '/usr/hadoop-2.6.5/sbin/hadoop-daemon.sh stop datanode'

3.7.3 Configure the service control script

In hd0[3-5]

vim /usr/lib/systemd/system/hdfs-dn.service

Add the following configuration: