How do you deploy an Apache Hadoop 2.6.0 HDFS cluster on Oracle Linux 10.x?

- By : Will

- Category : Apache-Hadoop

1 Background

2 Best practices

2.1 System environment configuration

2.1.1 System information

IP Address = 10.168.0.10[1-3]

OS = Oracle Linux 10.x x86_64

Host Name = hd0[1-3].cmdschool.org

The roles are distributed as follows,

Apache Hadoop HDFS NameNode (hdfs-nn) = hd01.cmdschool.org

Apache Hadoop HDFS SecondaryNameNode (hdfs-snn) = hd02.cmdschool.org

Apache Hadoop HDFS DataNode (hdfs-dn) = hd0[1-3].cmdschool.org
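The role layout above can be captured in a small lookup so that later per-host steps are easier to script. This is only a sketch: the function name `roles_for` is hypothetical, and `hdfs-dn` is assumed as the DataNode label (it matches the `hdfs-dn.service` unit name used later in this article).

```shell
#!/bin/sh
# Hypothetical helper: print the HDFS roles assigned to a short host name,
# following the role distribution listed above.
roles_for() {
    case "$1" in
        hd01) echo "hdfs-nn hdfs-dn" ;;   # NameNode + DataNode
        hd02) echo "hdfs-snn hdfs-dn" ;;  # SecondaryNameNode + DataNode
        hd03) echo "hdfs-dn" ;;           # DataNode only
        *)    echo "unknown host: $1" >&2; return 1 ;;
    esac
}

# Print the layout for the three nodes used in this article.
for host in hd01 hd02 hd03; do
    echo "$host.cmdschool.org: $(roles_for "$host")"
done
```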

2.1.1 Prepare the operating system

2.1.2 Prepare the JDK environment

In hd0[1-3],

After installation, check the JDK with the following command,

java -version

The command prints the following,

java version "1.8.0_121"

Java(TM) SE Runtime Environment (build 1.8.0_121-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.121-b13, mixed mode)
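When the check has to be repeated on several nodes, the version string can be extracted from the banner instead of being read by eye. The sketch below assumes the banner format shown above; the helper names `java_version_from_banner` and `is_java8` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: extract the quoted version string from the first line
# of a `java -version` banner read on standard input.
java_version_from_banner() {
    sed -n '1s/.*version "\([^"]*\)".*/\1/p'
}

# Hypothetical helper: succeed only if the version string is a Java 8 build.
is_java8() {
    case "$1" in
        1.8.*) return 0 ;;
        *)     return 1 ;;
    esac
}

# `java -version` writes its banner to stderr, hence the 2>&1.
if command -v java >/dev/null 2>&1; then
    ver=$(java -version 2>&1 | java_version_from_banner)
    if is_java8 "$ver"; then
        echo "OK: Java $ver"
    else
        echo "WARNING: found Java '$ver'; this article shows 1.8.0_121"
    fi
else
    echo "java not found in PATH"
fi
```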

2.1.3 Install rsync

In hd0[1-3],

dnf install -y rsync

2.1.4 Download the binary package

In hd0[1-3],

cd ~

wget https://archive.apache.org/dist/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz

For other versions, download from the following link,

https://archive.apache.org/dist/hadoop/common/

2.2 Software environment configuration

2.2.1 Configure name resolution

In hd0[1-3],

echo '10.168.0.101 hd01 hd01.cmdschool.org' >> /etc/hosts

echo '10.168.0.102 hd02 hd02.cmdschool.org' >> /etc/hosts

echo '10.168.0.103 hd03 hd03.cmdschool.org' >> /etc/hosts

Note: the above is for testing only; use DNS in production.
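Appending with `>>` adds duplicate lines when the step is run twice. A possible idempotent variant is sketched below; the helper `add_host_entry` and the `HOSTS_FILE` variable are hypothetical, and by default the script writes to a scratch file rather than to `/etc/hosts`.

```shell
#!/bin/sh
# Hypothetical helper: append "IP short-name FQDN" to a hosts file only if
# no existing line already has that IP address as its first field.
add_host_entry() (
    file=$1; ip=$2; short=$3; fqdn=$4
    # Exact match on the first field avoids regex surprises with the dots.
    if [ -f "$file" ] && awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
        exit 0
    fi
    echo "$ip $short $fqdn" >> "$file"
)

# Defaults to a scratch file; set HOSTS_FILE=/etc/hosts to apply for real.
HOSTS_FILE=${HOSTS_FILE:-$(mktemp)}
for n in 1 2 3; do
    add_host_entry "$HOSTS_FILE" "10.168.0.10$n" "hd0$n" "hd0$n.cmdschool.org"
done
cat "$HOSTS_FILE"
```

Running the loop a second time against the same file leaves it unchanged.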

2.2.2 Configure public key authentication for the root user from the management node to the other nodes

In hd01,

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub root@hd01

ssh-copy-id -i ~/.ssh/id_rsa.pub root@hd02

ssh-copy-id -i ~/.ssh/id_rsa.pub root@hd03

Once configured, test it with the following commands,

ssh root@hd01

ssh root@hd02

ssh root@hd03

2.2.3 Configure the service user

In hd01,

groupadd hadoop

groupadd hdfs

useradd -g hdfs -G hadoop -d /var/lib/hadoop-hdfs/ hdfs

ssh root@hd02 groupadd hadoop

ssh root@hd02 groupadd hdfs

ssh root@hd02 useradd -g hdfs -G hadoop -d /var/lib/hadoop-hdfs/ hdfs

ssh root@hd03 groupadd hadoop

ssh root@hd03 groupadd hdfs

ssh root@hd03 useradd -g hdfs -G hadoop -d /var/lib/hadoop-hdfs/ hdfs
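The same three commands run on every node, so they can also be generated in a loop. The following is a sketch only: `setup_hdfs_account` and the `APPLY` switch are hypothetical names, and without `APPLY=1` the script merely prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical helper: print (or, with APPLY=1, execute) the account setup
# commands for one host. "local" means the current host, without ssh.
setup_hdfs_account() (
    host=$1
    for cmd in \
        "groupadd hadoop" \
        "groupadd hdfs" \
        "useradd -g hdfs -G hadoop -d /var/lib/hadoop-hdfs/ hdfs"
    do
        [ "$host" = "local" ] || cmd="ssh root@$host $cmd"
        if [ "${APPLY:-0}" = "1" ]; then
            $cmd
        else
            echo "$cmd"
        fi
    done
)

# hd01 is the local host here; hd02 and hd03 are reached over ssh as root.
for host in local hd02 hd03; do
    setup_hdfs_account "$host"
done
```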

2.2.4 Configure public key authentication for the hdfs user from the master node to the other nodes

In hd01,

su - hdfs

mkdir -p ~/.ssh/

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

exit

ssh hd02 mkdir -p /var/lib/hadoop-hdfs/.ssh/

scp /var/lib/hadoop-hdfs/.ssh/id_rsa.pub hd02:/var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd02 chown hdfs:hdfs -R /var/lib/hadoop-hdfs/.ssh/

ssh hd02 chmod 0600 /var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd03 mkdir -p /var/lib/hadoop-hdfs/.ssh/

scp /var/lib/hadoop-hdfs/.ssh/id_rsa.pub hd03:/var/lib/hadoop-hdfs/.ssh/authorized_keys

ssh hd03 chown hdfs:hdfs -R /var/lib/hadoop-hdfs/.ssh/

ssh hd03 chmod 0600 /var/lib/hadoop-hdfs/.ssh/authorized_keys

After the configuration is complete, be sure to test public key authentication with the following commands,

su - hdfs

ssh hd01

ssh hd02

ssh hd03

Note: if each login succeeds without a password prompt, the configuration is complete.

2.2.5 Configure public key authentication for the hdfs user from the other nodes to the master node

In hd01,

scp /var/lib/hadoop-hdfs/.ssh/id_rsa hd02:/var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd02 chown hdfs:hdfs /var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd02 chmod 0600 /var/lib/hadoop-hdfs/.ssh/id_rsa

scp /var/lib/hadoop-hdfs/.ssh/id_rsa hd03:/var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd03 chown hdfs:hdfs /var/lib/hadoop-hdfs/.ssh/id_rsa

ssh hd03 chmod 0600 /var/lib/hadoop-hdfs/.ssh/id_rsa

After the configuration is complete, be sure to test public key authentication with the following commands,

In hd0[1-3],

su - hdfs

ssh hd01

Note: if the login succeeds without a password prompt, the configuration is complete.
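The login test can also be run without opening interactive shells. The sketch below relies on the OpenSSH `BatchMode` option, which makes `ssh` fail instead of prompting; the helper `ssh_probe_cmd` and the `APPLY` switch are hypothetical, and the script only prints the probe commands unless `APPLY=1` is set.

```shell
#!/bin/sh
# Hypothetical helper: build a non-interactive ssh probe for one host.
# BatchMode=yes makes ssh fail instead of prompting for a password, so a
# broken key setup shows up as a non-zero exit status.
ssh_probe_cmd() {
    echo "ssh -o BatchMode=yes -o ConnectTimeout=5 $1 true"
}

# Intended to run as the hdfs user. Set APPLY=1 to actually connect.
for host in hd01 hd02 hd03; do
    cmd=$(ssh_probe_cmd "$host")
    if [ "${APPLY:-0}" = "1" ]; then
        if $cmd; then
            echo "$host: key login OK"
        else
            echo "$host: key login FAILED"
        fi
    else
        echo "$cmd"
    fi
done
```

A host whose host key has not yet been accepted is also reported as failed, because batch mode cannot answer the confirmation prompt.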

2.3 Deploy the Apache Hadoop package

In hd0[1-3],

2.3.1 Unpack the archive

cd ~

tar -xf hadoop-2.6.0.tar.gz

2.3.2 Deploy the package

cd ~

mv hadoop-2.6.0 /usr/

chown hdfs:hdfs -R /usr/hadoop-2.6.0/

chmod 775 -R /usr/hadoop-2.6.0/

ln -s /usr/hadoop-2.6.0/etc/hadoop /etc/hadoop

After deploying the software, you also need to set its environment variables,

vim /etc/profile.d/hadoop.sh

Add the following configuration,

export HADOOP_HOME=/usr/hadoop-2.6.0

export HADOOP_PREFIX=${HADOOP_HOME}

export HADOOP_YARN_HOME=${HADOOP_HOME}

export PATH=${HADOOP_HOME}/bin:$PATH

export PATH=${HADOOP_HOME}/sbin:$PATH

export HADOOP_CONF_DIR=/etc/hadoop

export HADOOP_LOG_DIR=/var/log/hadoop-hdfs

export HADOOP_PID_DIR=/var/run/hadoop-hdfs

export HADOOP_MASTER=hd01:${HADOOP_HOME}

export HADOOP_IDENT_STRING=$USER

export HADOOP_NICENESS=0

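The configuration above is explained below; pay particular attention to line 9,

– Line 1 declares the Hadoop installation directory

– Line 2 reuses the value of "HADOOP_HOME"

– Line 3 reuses the value of "HADOOP_HOME" to indicate the location of YARN

– Line 4 adds Hadoop's bin directory to the executable search path

– Line 5 adds Hadoop's sbin directory to the executable search path

– Line 6 declares the location of the Hadoop configuration files

– Line 7 declares the location of the Hadoop log files

– Line 8 declares the location of the Hadoop PID files

– Line 9 tells the non-master servers to use rsync to synchronize from the "/usr/hadoop-2.6.0" directory on hd01 (this setting is the key point)

– Line 10 sets the identity string used in log and PID file names ("$USER" refers to the current user)

– Line 11 declares the process priority (niceness); the default value is "0"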
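The ninth variable is what produces the "rsync from hd01:/usr/hadoop-2.6.0" message when a daemon is started later in this article. The sketch below only illustrates the idea of mirroring the master's installation directory before start-up. The helper `sync_from_master_cmd` is hypothetical, and the option set `-a --delete -e ssh` is an assumption chosen for illustration, not a copy of what `hadoop-daemon.sh` runs.

```shell
#!/bin/sh
# Illustrative only: build a "sync from master before start" command.
# The rsync options (-a --delete -e ssh) are assumptions for this sketch.
sync_from_master_cmd() (
    master=$1   # for example hd01:/usr/hadoop-2.6.0
    prefix=$2   # local installation directory
    if [ -z "$master" ]; then
        exit 0  # nothing to sync when HADOOP_MASTER is unset
    fi
    echo "rsync -a --delete -e ssh $master/ $prefix"
)

# Print the command for the values used in this article.
sync_from_master_cmd "${HADOOP_MASTER:-hd01:/usr/hadoop-2.6.0}" "${HADOOP_PREFIX:-/usr/hadoop-2.6.0}"
```

Because the transfer runs over SSH as the user that starts the daemon, the hdfs user on every node needs key-based access to hd01. A mirror of this kind would also overwrite local changes on the other nodes, so configuration edits belong on hd01.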

Create the declared directories and set their permissions,

mkdir -p /var/log/hadoop-hdfs /var/run/hadoop-hdfs

chown hdfs:hdfs /var/log/hadoop-hdfs /var/run/hadoop-hdfs

chmod 775 /var/log/hadoop-hdfs /var/run/hadoop-hdfs

Then load the environment variables,

source /etc/profile.d/hadoop.sh

2.3.3 Test the installation

hadoop version

You should see output like the following,

Hadoop 2.6.0

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1

Compiled by jenkins on 2014-11-13T21:10Z

Compiled with protoc 2.5.0

From source with checksum 18e43357c8f927c0695f1e9522859d6a

This command was run using /usr/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0.jar

2.4 Configure the NameNode (master node)

In hd01

2.4.1 Define the core-site.xml configuration file

cp /etc/hadoop/core-site.xml /etc/hadoop/core-site.xml.default

vim /etc/hadoop/core-site.xml

Modify the configuration as follows,

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hd01:9000</value>

</property>

</configuration>

Note: the parameter "fs.defaultFS" defines "hd01" as the NameNode
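To confirm what was actually written to the file, the value can be read back from the XML. This is a minimal sketch that assumes one tag per line, as in the listing above; the helper `xml_prop` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop *-site.xml file. Assumes the simple layout shown above.
xml_prop() (
    file=$1; name=$2
    awk -v want="<name>$name</name>" '
        index($0, want) { hit = 1 }
        hit && match($0, /<value>[^<]*/) {
            # Skip the 7 characters of the opening <value> tag.
            print substr($0, RSTART + 7, RLENGTH - 7)
            exit
        }
    ' "$file"
)

conf=${HADOOP_CONF_DIR:-/etc/hadoop}/core-site.xml
if [ -f "$conf" ]; then
    echo "fs.defaultFS = $(xml_prop "$conf" fs.defaultFS)"
fi
```

On a node where Hadoop is installed, `hdfs getconf -confKey fs.defaultFS` reports the value that the Hadoop client itself resolves.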

2.4.2 Define the hdfs-site.xml configuration file

cp /etc/hadoop/hdfs-site.xml /etc/hadoop/hdfs-site.xml.default

vim /etc/hadoop/hdfs-site.xml

Modify the configuration as follows,

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/dfs/nn</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>/data/dfs/snn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/dfs/dn</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

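Note:

– The parameter "dfs.namenode.name.dir" defines where the NameNode stores its data

– The parameter "dfs.namenode.checkpoint.dir" defines where the SecondaryNameNode stores its data

– The parameter "dfs.namenode.checkpoint.dir" depends on the configuration file "/etc/hadoop/hadoop-metrics2.properties"

– The parameter "dfs.datanode.data.dir" defines where the DataNode stores its data

– The parameter "dfs.replication" defines the number of replicas kept for each data block

In addition, create the data storage directory defined in the configuration file,

mkdir -p /data/dfs/nn

chown hdfs:hadoop /data/dfs/nn

chmod 775 /data/dfs/nn

Alternatively, we recommend granting permissions on the parent directory and letting the "nn" directory be created automatically,

mkdir -p /data/dfs

chown hdfs:hadoop /data/dfs

chmod 775 /data/dfs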

2.4.3 Format the NameNode

su - hdfs -c 'hadoop namenode -format'

The following messages are displayed,

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

26/02/10 16:25:23 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hd01/10.168.0.101

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.6.0

STARTUP_MSG: classpath = /etc/hadoop:[…]

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z

STARTUP_MSG: java = 1.8.0_121

************************************************************/

26/02/10 16:25:23 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

26/02/10 16:25:23 INFO namenode.NameNode: createNameNode [-format]

26/02/10 16:25:24 WARN common.Util: Path /data/dfs/nn should be specified as a URI in configuration files. Please update hdfs configuration.

26/02/10 16:25:24 WARN common.Util: Path /data/dfs/nn should be specified as a URI in configuration files. Please update hdfs configuration.

Formatting using clusterid: CID-7f34804d-f364-46e3-9f9e-dfdcef5dcfe3

26/02/10 16:25:24 INFO namenode.FSNamesystem: No KeyProvider found.

26/02/10 16:25:24 INFO namenode.FSNamesystem: fsLock is fair:true

26/02/10 16:25:24 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

26/02/10 16:25:24 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

26/02/10 16:25:24 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

26/02/10 16:25:24 INFO blockmanagement.BlockManager: The block deletion will start around 2026 Feb 10 16:25:24

26/02/10 16:25:24 INFO util.GSet: Computing capacity for map BlocksMap

26/02/10 16:25:24 INFO util.GSet: VM type = 64-bit

26/02/10 16:25:24 INFO util.GSet: 2.0% max memory 895.5 MB = 17.9 MB

26/02/10 16:25:24 INFO util.GSet: capacity = 2^21 = 2097152 entries

26/02/10 16:25:24 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

26/02/10 16:25:24 INFO blockmanagement.BlockManager: defaultReplication = 3

26/02/10 16:25:24 INFO blockmanagement.BlockManager: maxReplication = 512

26/02/10 16:25:24 INFO blockmanagement.BlockManager: minReplication = 1

26/02/10 16:25:24 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

26/02/10 16:25:24 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

26/02/10 16:25:24 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

26/02/10 16:25:24 INFO blockmanagement.BlockManager: encryptDataTransfer = false

26/02/10 16:25:24 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

26/02/10 16:25:24 INFO namenode.FSNamesystem: fsOwner = hdfs (auth:SIMPLE)

26/02/10 16:25:24 INFO namenode.FSNamesystem: supergroup = supergroup

26/02/10 16:25:24 INFO namenode.FSNamesystem: isPermissionEnabled = true

26/02/10 16:25:24 INFO namenode.FSNamesystem: HA Enabled: false

26/02/10 16:25:24 INFO namenode.FSNamesystem: Append Enabled: true

26/02/10 16:25:25 INFO util.GSet: Computing capacity for map INodeMap

26/02/10 16:25:25 INFO util.GSet: VM type = 64-bit

26/02/10 16:25:25 INFO util.GSet: 1.0% max memory 895.5 MB = 9.0 MB

26/02/10 16:25:25 INFO util.GSet: capacity = 2^20 = 1048576 entries

26/02/10 16:25:25 INFO namenode.NameNode: Caching file names occuring more than 10 times

26/02/10 16:25:25 INFO util.GSet: Computing capacity for map cachedBlocks

26/02/10 16:25:25 INFO util.GSet: VM type = 64-bit

26/02/10 16:25:25 INFO util.GSet: 0.25% max memory 895.5 MB = 2.2 MB

26/02/10 16:25:25 INFO util.GSet: capacity = 2^18 = 262144 entries

26/02/10 16:25:25 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

26/02/10 16:25:25 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

26/02/10 16:25:25 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

26/02/10 16:25:25 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

26/02/10 16:25:25 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

26/02/10 16:25:25 INFO util.GSet: Computing capacity for map NameNodeRetryCache

26/02/10 16:25:25 INFO util.GSet: VM type = 64-bit

26/02/10 16:25:25 INFO util.GSet: 0.029999999329447746% max memory 895.5 MB = 275.1 KB

26/02/10 16:25:25 INFO util.GSet: capacity = 2^15 = 32768 entries

26/02/10 16:25:25 INFO namenode.NNConf: ACLs enabled? false

26/02/10 16:25:25 INFO namenode.NNConf: XAttrs enabled? true

26/02/10 16:25:25 INFO namenode.NNConf: Maximum size of an xattr: 16384

26/02/10 16:25:25 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1177556838-10.168.0.101-1770711925562

26/02/10 16:25:25 INFO common.Storage: Storage directory /data/dfs/nn has been successfully formatted.

26/02/10 16:25:25 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

26/02/10 16:25:25 INFO util.ExitUtil: Exiting with status 0

26/02/10 16:25:25 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hd01/10.168.0.101

************************************************************/

Note: "[…]" indicates omitted content
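In a long log like this, the decisive line is the "successfully formatted" message for the configured storage directory. A small sketch for checking a captured log follows; the helper `format_succeeded` is hypothetical, and the sample text is taken from the output above.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a captured format log (on stdin) contains
# the success message for the given storage directory.
format_succeeded() {
    grep -q "Storage directory $1 has been successfully formatted"
}

# Example with the two decisive lines from the output above.
sample='INFO common.Storage: Storage directory /data/dfs/nn has been successfully formatted.
INFO util.ExitUtil: Exiting with status 0'

if printf '%s\n' "$sample" | format_succeeded /data/dfs/nn; then
    echo "NameNode metadata directory formatted"
fi
```

After a successful format, the directory `/data/dfs/nn/current` should also contain a `VERSION` file.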

2.4.4 Start the daemon

su - hdfs -c '/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh start namenode'

You should see output like the following,

rsync from hd01:/usr/hadoop-2.6.0

starting namenode, logging to /var/log/hadoop-hdfs/hadoop-hdfs-namenode-hd01.cmdschool.org.out

After startup, you can list the running process with the following command,

pgrep -u hdfs java -a

You should see output like the following,

2667 /usr/java/jdk1.8.0_121/bin/java -Dproc_namenode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop-hdfs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/usr/hadoop-2.6.0 -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,console -Djava.library.path=/usr/hadoop-2.6.0/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop-hdfs -Dhadoop.log.file=hadoop-hdfs-namenode-hd01.cmdschool.org.log -Dhadoop.home.dir=/usr/hadoop-2.6.0 -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/usr/hadoop-2.6.0/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.namenode.NameNode

You can confirm that the program is listening with the following command,

ss -antp | grep -f <(pgrep -u hdfs java)

You should see output like the following,

LISTEN 0 128 0.0.0.0:50070 0.0.0.0:* users:(("java",pid=2667,fd=187))

LISTEN 0 128 10.168.0.101:9000 0.0.0.0:* users:(("java",pid=2667,fd=206))
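The two listening ports, 50070 (web UI) and 9000 (the port from fs.defaultFS), can also be checked by port number instead of by process ID. A minimal sketch, assuming the column layout of `ss` shown above; the helper `port_listening` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `ss -ltn`-style lines on stdin and succeed if
# some LISTEN socket's local address ends in ":<port>".
port_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" {
            n = split($4, part, ":")   # the last piece is the port number
            if (part[n] == port) found = 1
        }
        END { exit !found }
    '
}

# Check the two NameNode ports; needs the iproute2 `ss` tool.
if command -v ss >/dev/null 2>&1; then
    for p in 50070 9000; do
        if ss -ltn | port_listening "$p"; then
            echo "port $p: listening"
        else
            echo "port $p: not listening"
        fi
    done
fi
```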

Once this test is done, stop the service with the following command,

su - hdfs -c '/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh stop namenode'

2.4.5 Configure the service control script

vim /usr/lib/systemd/system/hdfs-nn.service

You can add the following configuration,

[Unit]

Description=Apache HDFS namenode manager

Wants=network-online.target

After=network-online.target

Documentation=https://hadoop.apache.org/docs/

[Service]

Type=forking

User=hdfs

Group=hdfs

Environment="JAVA_HOME=/usr/java/jdk1.8.0_121"

Environment="HADOOP_HOME=/usr/hadoop-2.6.0"

Environment="HADOOP_PREFIX=/usr/hadoop-2.6.0"

Environment="HADOOP_YARN_HOME=/usr/hadoop-2.6.0"

Environment="HADOOP_CONF_DIR=/etc/hadoop"

Environment="HADOOP_LOG_DIR=/var/log/hadoop-hdfs"

Environment="HADOOP_PID_DIR=/var/run/hadoop-hdfs"

Environment="HADOOP_MASTER=hd01:/usr/hadoop-2.6.0"

Environment="HADOOP_IDENT_STRING=hdfs"

Environment="HADOOP_NICENESS=0"

ExecStartPre=+/bin/sh -c 'mkdir -p /var/run/hadoop-hdfs;chown hdfs:hdfs /var/run/hadoop-hdfs;chmod 775 /var/run/hadoop-hdfs'

ExecStartPre=+/bin/sh -c 'mkdir -p /var/log/hadoop-hdfs;chown hdfs:hdfs /var/log/hadoop-hdfs;chmod 775 /var/log/hadoop-hdfs'

ExecStartPre=+/bin/sh -c 'mkdir -p /data/dfs/nn;chown hdfs:hadoop /data/dfs/nn;chmod 775 /data/dfs/nn'

ExecStart=/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh start namenode

ExecStop=/usr/bin/kill -SIGINT $MAINPID

PIDFile=/var/run/hadoop-hdfs/hadoop-hdfs-namenode.pid

LimitNOFILE=65535

LimitCORE=infinity

Restart=on-failure

RestartSec=5

TimeoutSec=300

KillMode=process

IgnoreSIGPIPE=no

SendSIGKILL=no

[Install]

WantedBy=multi-user.target

After editing the script, reload the service definitions with the following command,

systemctl daemon-reload

Start the service and enable it at boot,

systemctl start hdfs-nn.service

systemctl enable hdfs-nn.service

2.4.6 Open the node's ports

firewall-cmd --permanent --add-port 50070/tcp --add-port 9000/tcp

firewall-cmd --reload

firewall-cmd --list-all

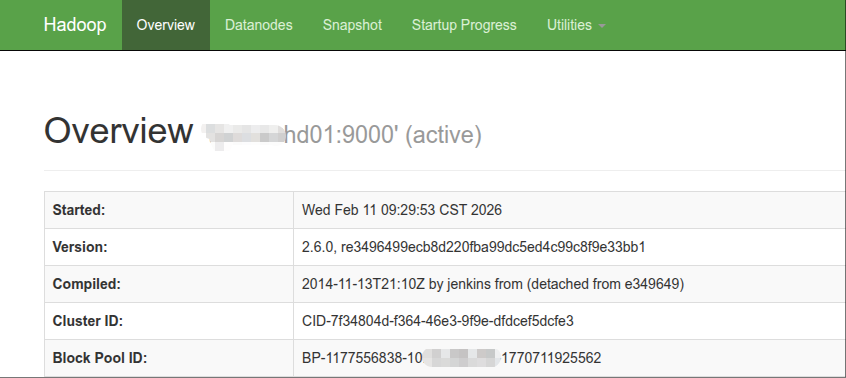

2.4.7 Browser test

In Windows Client

http://10.168.0.101:50070/

The page is displayed as follows,

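2.5 Configure the SecondaryNameNode

2.5.1 Create the data storage directory

In hd02

mkdir -p /data/dfs/snn/

chown hdfs:hadoop /data/dfs/snn/

chmod 775 /data/dfs/snn/

Alternatively, we recommend granting permissions on the parent directory and letting the "snn" directory be created automatically,

mkdir -p /data/dfs

chown hdfs:hadoop /data/dfs

chmod 775 /data/dfs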

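2.5.2 Start the daemon

In hd02

su - hdfs -c '/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh start secondarynamenode'

Because the environment variable "HADOOP_MASTER" is declared, the master node's configuration is synchronized to this host automatically, and you should see output like the following,

rsync from hd01:/usr/hadoop-2.6.0

starting secondarynamenode, logging to /var/log/hadoop-hdfs/hadoop-hdfs-secondarynamenode-hd02.cmdschool.org.out

After startup, you can list the running process with the following command,

pgrep -u hdfs java -a

You should see output like the following,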

21487 /usr/java/jdk1.8.0_121/bin/java -Dproc_secondarynamenode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop-hdfs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/usr/hadoop-2.6.0 -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,console -Djava.library.path=/usr/hadoop-2.6.0/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop-hdfs -Dhadoop.log.file=hadoop-hdfs-secondarynamenode-hd02.cmdschool.org.log -Dhadoop.home.dir=/usr/hadoop-2.6.0 -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/usr/hadoop-2.6.0/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode

You can confirm that the program is listening with the following command,

ss -antp | grep -f <(pgrep -u hdfs java)

You should see output like the following,

LISTEN 0 128 0.0.0.0:50090 0.0.0.0:* users:(("java",pid=21487,fd=200))

Once this test is done, stop the service with the following command,

su - hdfs -c '/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh stop secondarynamenode'

2.5.3 Configure the service control script

In hd02

vim /usr/lib/systemd/system/hdfs-snn.service

You can add the following configuration,

[Unit]

Description=Apache HDFS secondarynamenode manager

Wants=network-online.target

After=network-online.target

Documentation=https://hadoop.apache.org/docs/

[Service]

Type=forking

User=hdfs

Group=hdfs

Environment="JAVA_HOME=/usr/java/jdk1.8.0_121"

Environment="HADOOP_HOME=/usr/hadoop-2.6.0"

Environment="HADOOP_PREFIX=/usr/hadoop-2.6.0"

Environment="HADOOP_YARN_HOME=/usr/hadoop-2.6.0"

Environment="HADOOP_CONF_DIR=/etc/hadoop"

Environment="HADOOP_LOG_DIR=/var/log/hadoop-hdfs"

Environment="HADOOP_PID_DIR=/var/run/hadoop-hdfs"

Environment="HADOOP_MASTER=hd01:/usr/hadoop-2.6.0"

Environment="HADOOP_IDENT_STRING=hdfs"

Environment="HADOOP_NICENESS=0"

ExecStartPre=+/bin/sh -c 'mkdir -p /var/run/hadoop-hdfs;chown hdfs:hdfs /var/run/hadoop-hdfs;chmod 775 /var/run/hadoop-hdfs'

ExecStartPre=+/bin/sh -c 'mkdir -p /var/log/hadoop-hdfs;chown hdfs:hdfs /var/log/hadoop-hdfs;chmod 775 /var/log/hadoop-hdfs'

ExecStartPre=+/bin/sh -c 'mkdir -p /data/dfs/snn;chown hdfs:hadoop /data/dfs/snn;chmod 775 /data/dfs/snn'

ExecStart=/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh start secondarynamenode

ExecStop=/usr/bin/kill -SIGINT $MAINPID

PIDFile=/var/run/hadoop-hdfs/hadoop-hdfs-secondarynamenode.pid

LimitNOFILE=65535

LimitCORE=infinity

Restart=on-failure

RestartSec=5

TimeoutSec=300

KillMode=process

IgnoreSIGPIPE=no

SendSIGKILL=no

[Install]

WantedBy=multi-user.target

After editing the script, reload the service definitions with the following command,

systemctl daemon-reload

Start the service and enable it at boot,

systemctl start hdfs-snn.service

systemctl enable hdfs-snn.service

2.5.4 Open the node's ports

In hd02

firewall-cmd --permanent --add-port 50090/tcp

firewall-cmd --reload

firewall-cmd --list-all

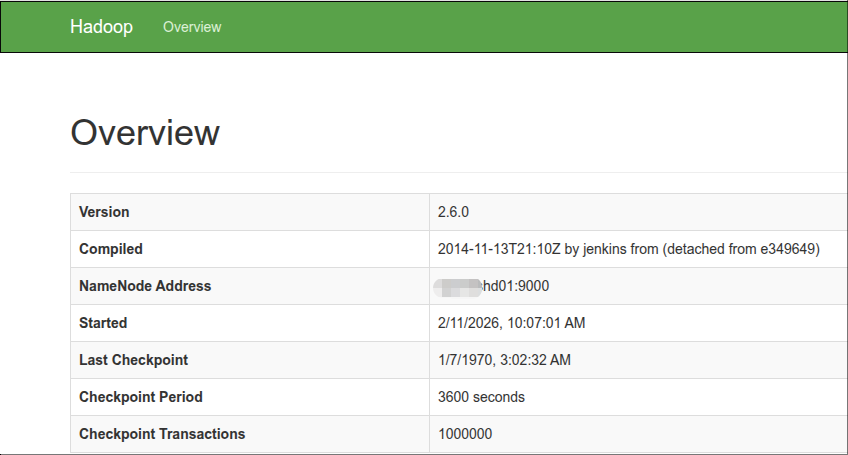

2.5.5 Test the service in a browser

In Windows Client

http://10.168.0.102:50090

The page is displayed as follows,

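2.6 Configure the DataNodes

2.6.1 Create the data storage directory

In hd0[1-3]

mkdir -p /data/dfs/dn/

chown hdfs:hadoop /data/dfs/dn/

chmod 775 /data/dfs/dn/

Alternatively, we recommend granting permissions on the parent directory and letting the "dn" directory be created automatically,

mkdir -p /data/dfs

chown hdfs:hadoop /data/dfs

chmod 775 /data/dfs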

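2.6.2 Start the daemon

In hd0[1-3]

su - hdfs -c '/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh start datanode'

Because the environment variable "HADOOP_MASTER" is declared, the master node's configuration is synchronized to this host automatically, and you should see output like the following,

rsync from hd01:/usr/hadoop-2.6.0

starting datanode, logging to /var/log/hadoop-hdfs/hadoop-hdfs-datanode-hd03.cmdschool.org.out

After startup, you can list the running process with the following command,

pgrep -u hdfs java -a

You should see output like the following,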

21367 /usr/java/jdk1.8.0_121/bin/java -Dproc_datanode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop-hdfs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/usr/hadoop-2.6.0 -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,console -Djava.library.path=/usr/hadoop-2.6.0/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/var/log/hadoop-hdfs -Dhadoop.log.file=hadoop-hdfs-datanode-hd03.cmdschool.org.log -Dhadoop.home.dir=/usr/hadoop-2.6.0 -Dhadoop.id.str=hdfs -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/usr/hadoop-2.6.0/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -server -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.datanode.DataNode

You can confirm that the program is listening with the following command,

ss -antp | grep -f <(pgrep -u hdfs java)

You should see output like the following,

LISTEN 0 128 0.0.0.0:50075 0.0.0.0:* users:(("java",pid=21367,fd=192))

LISTEN 0 50 0.0.0.0:50010 0.0.0.0:* users:(("java",pid=21367,fd=188))

LISTEN 0 128 0.0.0.0:50020 0.0.0.0:* users:(("java",pid=21367,fd=206))

ESTAB 0 0 10.168.0.103:47814 10.168.0.101:9000 users:(("java",pid=21367,fd=217))

Once this test is done, stop the service with the following command,

su - hdfs -c '/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh stop datanode'

2.6.3 Configure the service control script

In hd0[1-3]

vim /usr/lib/systemd/system/hdfs-dn.service

You can add the following configuration,

[Unit]

Description=Apache HDFS datanode manager

Wants=network-online.target

After=network-online.target

Documentation=https://hadoop.apache.org/docs/

[Service]

Type=forking

User=hdfs

Group=hdfs

Environment="JAVA_HOME=/usr/java/jdk1.8.0_121"

Environment="HADOOP_HOME=/usr/hadoop-2.6.0"

Environment="HADOOP_PREFIX=/usr/hadoop-2.6.0"

Environment="HADOOP_YARN_HOME=/usr/hadoop-2.6.0"

Environment="HADOOP_CONF_DIR=/etc/hadoop"

Environment="HADOOP_LOG_DIR=/var/log/hadoop-hdfs"

Environment="HADOOP_PID_DIR=/var/run/hadoop-hdfs"

Environment="HADOOP_MASTER=hd01:/usr/hadoop-2.6.0"

Environment="HADOOP_IDENT_STRING=hdfs"

Environment="HADOOP_NICENESS=0"

ExecStartPre=+/bin/sh -c 'mkdir -p /var/run/hadoop-hdfs;chown hdfs:hdfs /var/run/hadoop-hdfs;chmod 775 /var/run/hadoop-hdfs'

ExecStartPre=+/bin/sh -c 'mkdir -p /var/log/hadoop-hdfs;chown hdfs:hdfs /var/log/hadoop-hdfs;chmod 775 /var/log/hadoop-hdfs'

ExecStartPre=+/bin/sh -c 'mkdir -p /data/dfs/dn;chown hdfs:hadoop /data/dfs/dn;chmod 775 /data/dfs/dn'

ExecStart=/usr/hadoop-2.6.0/sbin/hadoop-daemon.sh start datanode

ExecStop=/usr/bin/kill -SIGINT $MAINPID

PIDFile=/var/run/hadoop-hdfs/hadoop-hdfs-datanode.pid

LimitNOFILE=65535

LimitCORE=infinity

Restart=on-failure

RestartSec=5

TimeoutSec=300

KillMode=process

IgnoreSIGPIPE=no

SendSIGKILL=no

[Install]

WantedBy=multi-user.target

After editing the script, reload the service definitions with the following command,

systemctl daemon-reload

Start the service and enable it at boot,

systemctl start hdfs-dn.service

systemctl enable hdfs-dn.service

2.6.4 Open the node's ports

In hd0[1-3]

firewall-cmd --permanent --add-port 50010/tcp --add-port 50020/tcp --add-port 50075/tcp

firewall-cmd --reload

firewall-cmd --list-all
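The ports opened for the three roles in this article can be collected in one place, which helps on nodes that carry more than one role. This is a sketch: the helper `firewall_cmd_for_role` and the role labels are hypothetical, and the script only prints the commands.

```shell
#!/bin/sh
# Hypothetical helper: print the firewall-cmd call for one HDFS role, using
# the ports opened in this article.
firewall_cmd_for_role() (
    case "$1" in
        hdfs-nn)  ports="50070 9000" ;;
        hdfs-snn) ports="50090" ;;
        hdfs-dn)  ports="50010 50020 50075" ;;
        *) echo "unknown role: $1" >&2; exit 1 ;;
    esac
    args=""
    for p in $ports; do
        args="$args --add-port $p/tcp"
    done
    echo "firewall-cmd --permanent$args"
)

for role in hdfs-nn hdfs-snn hdfs-dn; do
    firewall_cmd_for_role "$role"
done
```

After running the printed commands on a node, `firewall-cmd --reload` is still needed to activate the permanent rules.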

2.7 Test HDFS

In hd01
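A minimal smoke test for this step could look like the following sketch: report the cluster state, then write, list, read and remove one small file. The path `/tmp/smoke`, the file name `hosts.txt`, the helper `hdfs_smoke_cmds` and the `APPLY` switch are all illustrative assumptions; without `APPLY=1` the script only prints the commands.

```shell
#!/bin/sh
# Hypothetical smoke test: list the HDFS commands for a write/read round
# trip. /tmp/smoke and hosts.txt are illustrative names.
hdfs_smoke_cmds() (
    dir=${1:-/tmp/smoke}
    cat <<EOF
hdfs dfsadmin -report
hdfs dfs -mkdir -p $dir
hdfs dfs -put -f /etc/hosts $dir/hosts.txt
hdfs dfs -ls $dir
hdfs dfs -cat $dir/hosts.txt
hdfs dfs -rm -r -skipTrash $dir
EOF
)

# Print by default; with APPLY=1 run each command as the current user
# (expected to be hdfs) and stop at the first failure.
hdfs_smoke_cmds | while read -r cmd; do
    if [ "${APPLY:-0}" = "1" ]; then
        echo "+ $cmd"
        $cmd || exit 1
    else
        echo "$cmd"
    fi
done
```

With all three DataNodes running, the report from `hdfs dfsadmin -report` should list three live nodes.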

2.8 Use the HDFS web interface

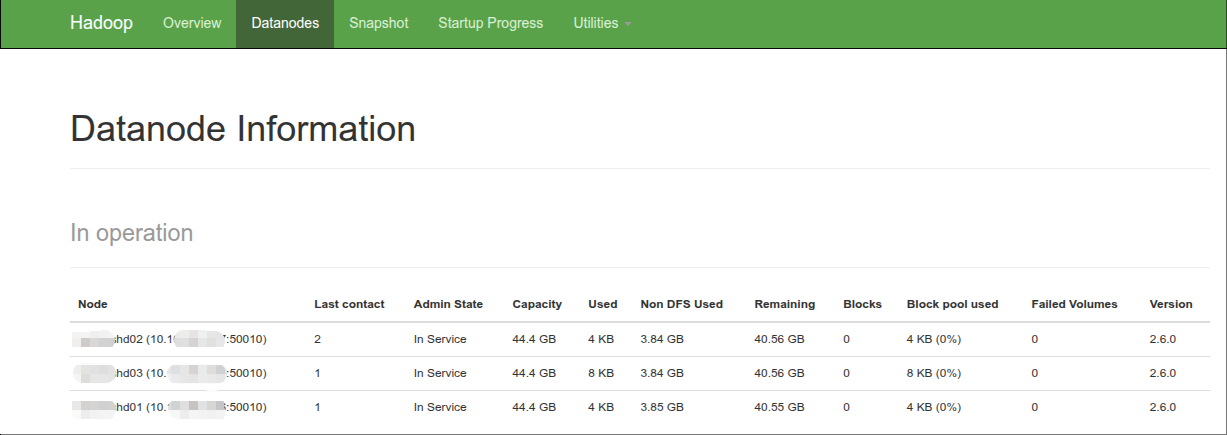

2.8.1 View DataNode information

http://10.168.0.101:50070/dfshealth.html#tab-datanode

The page is displayed as follows,

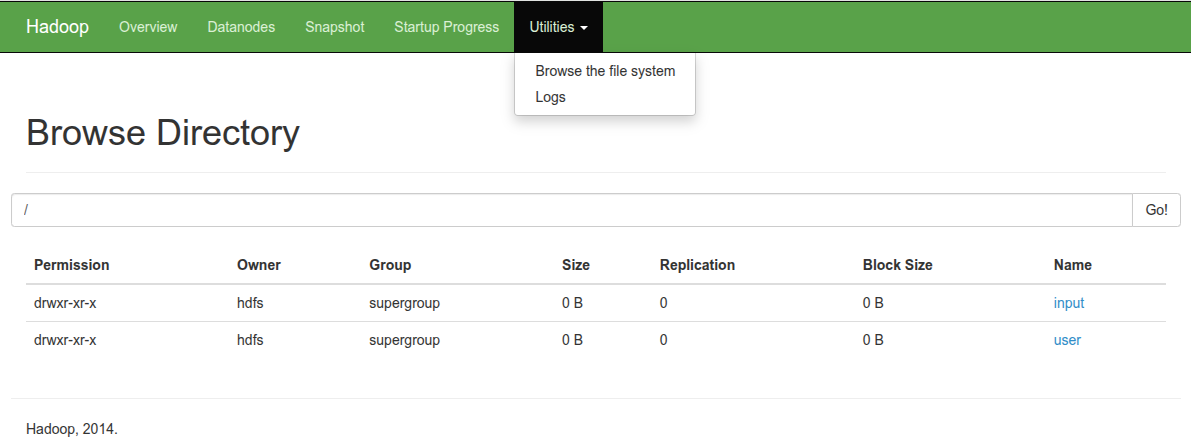
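The same information is available without a browser through the NameNode's JMX servlet on the web port. The sketch below assumes the `FSNamesystemState` MBean and its `NumLiveDataNodes` and `NumDeadDataNodes` attributes; the helper `nn_jmx_url` and the `APPLY` switch are hypothetical, and the request is only sent when `APPLY=1` is set.

```shell
#!/bin/sh
# Hypothetical helper: build the NameNode JMX URL for one MBean query.
nn_jmx_url() {
    echo "http://$1:50070/jmx?qry=$2"
}

url=$(nn_jmx_url 10.168.0.101 "Hadoop:service=NameNode,name=FSNamesystemState")
echo "$url"

# With APPLY=1, fetch the bean and show the live/dead DataNode counters.
if [ "${APPLY:-0}" = "1" ]; then
    curl -s "$url" | grep -E '"Num(Live|Dead)DataNodes"'
fi
```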

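2.8.2 Browse the file system

http://10.168.0.101:50070/explorer.html#

The page is displayed as follows,

3 Configure YARN

Configuring YARN is outside the scope of this article, so if you need it, please refer to the following document,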

https://www.cmdschool.org/archives/8313

